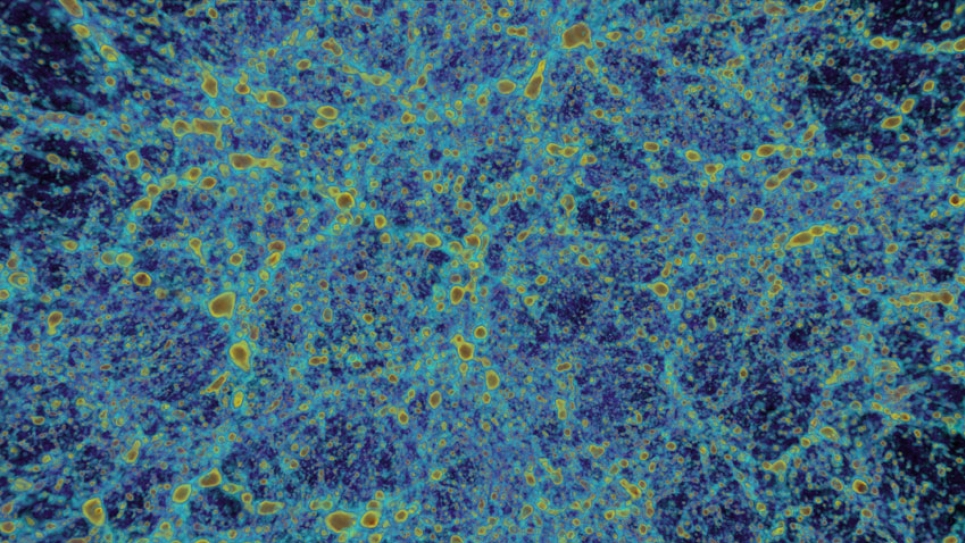

Argonne Scientists Probe the Cosmic Structure of the Dark Universe

Cosmology, the science of the origin and development of the Universe, is entering one of its most scientifically exciting phases. Two decades of surveying the sky have culminated in the celebrated Cosmological Standard Model. While the model describes current observations to accuracies of several percent, two of its key pillars, dark matter and dark energy, which together account for 95% of the mass-energy of the Universe, remain mysterious.

To help dispel the mystery, sky survey capabilities are being spectacularly improved. Next-generation observatories will open new routes to understanding the true nature of the “Dark Universe.” However, interpreting future observations will be impossible without an effort in theory, modeling, and simulation that is every bit as revolutionary as the new surveys.

A team of researchers led by Argonne National Laboratory’s Salman Habib and co-PI Katrin Heitmann is carrying out some of the largest high-resolution simulations of the distribution of matter in the Universe. Using Mira, a petascale supercomputer at the Argonne Leadership Computing Facility (ALCF), the researchers are resolving galaxy-scale mass concentrations over observational volumes representative of state-of-the-art sky surveys. A key aspect of the project involves developing a major simulation suite covering approximately 100 different cosmologies, an essential resource for interpreting next-generation observations. This initiative targets an improvement of roughly two to three orders of magnitude over currently available resources.

A paper on the team's research, "The Universe at Extreme Scale: Multi-Petaflop Sky Simulation on the BG/Q," earned a finalist designation for the ACM Gordon Bell Prize and will be included in the Gordon Bell Prize sessions at SC12.

“Surveys are getting so good now that we have to make predictions accurate to a sub-percent level, which is just unbelievable,” notes Dr. Habib. “The theory must keep pace with the observations. Supercomputers enable us to build as accurate a picture of the Universe as possible so we can compare it to these remarkable results.”

The simulation program is built around the new HACC (Hardware/Hybrid Accelerated Cosmology Code) framework, which is designed to exploit emerging supercomputer architectures such as the IBM Blue Gene/Q (BG/Q) at the ALCF. HACC is the first (and currently the only) large-scale cosmology code suite worldwide that can run at scale and beyond on all available supercomputer architectures. To achieve this versatility, the researchers essentially built the code from scratch. They have completed the port of the code to the BG/Q, where it is running extremely well.

HACC is designed to be modular so that its components can be optimized for performance separately. On the BG/Q, the critical kernel was the short-range force solver, which accounts for roughly three-quarters of the code's workload. “We worked closely with the Performance Engineering group at the ALCF,” said Dr. Habib. “We were responsible for the algorithms, and the ALCF group led the optimization of the code, especially for the short-range force kernel. That was a very big help.”

The simulations being produced will provide a unique resource for cosmological research. The database created from this project will be an essential component of Dark Universe science for years to come.