GLEANing Scientific Insights More Quickly

Moving data around in a supercomputer can be comparable to getting around in a big city: there’s traffic to navigate, multiple routes to consider, and shortcuts to help reduce travel time.

And as supercomputer technology continues to advance with increasingly complex architectures, the infrastructure for data movement is only getting more challenging to traverse.

Researchers at the Argonne Leadership Computing Facility (ALCF) are developing a software tool, called GLEAN, to help users optimize data movement between the compute, analysis, and storage resources of high-performance computing systems. Optimized data movement improves a system’s input/output (I/O) performance, the speed at which it reads and writes data, giving researchers a faster path to scientific insights.

“GLEAN is kind of like a Google Maps for supercomputers: it helps the application to understand how the underlying infrastructure is connected and then determines the best route for data to get from point A to point B,” said Venkatram Vishwanath, Argonne computer scientist and lead developer of GLEAN.

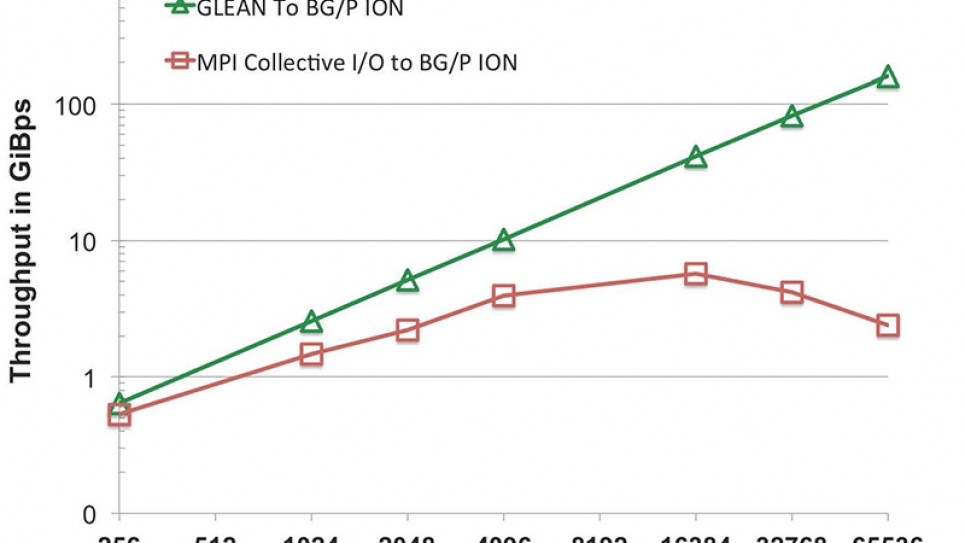

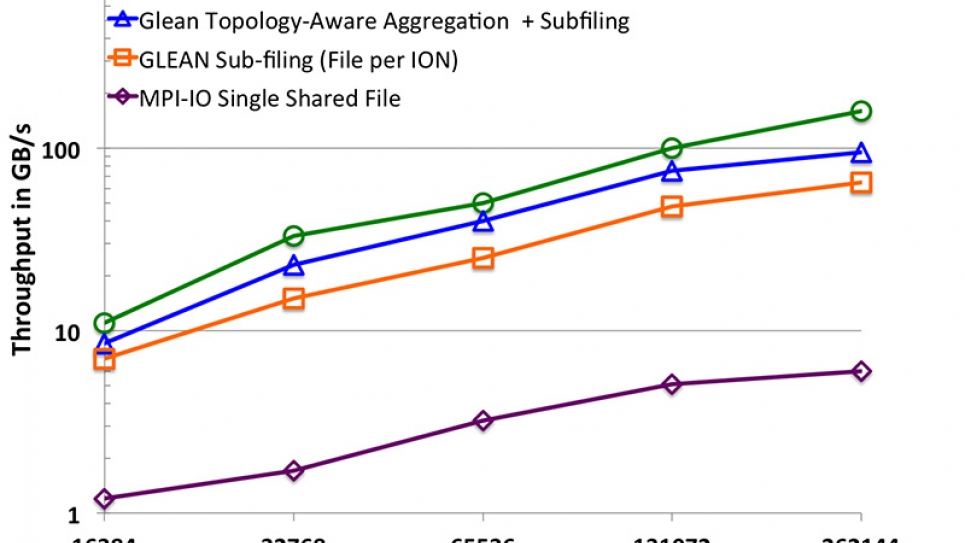

The U.S. Department of Energy’s leadership-class supercomputers support hundreds of computationally intensive research projects. As the computing power of these machines continues to increase, so does the size of the datasets they produce for researchers to analyze. But the development of I/O and storage capabilities to handle these larger datasets is not keeping pace with the leaps being made in compute power. This imbalance has caused I/O to become a significant bottleneck for computing applications, slowing the pace of scientific discovery that is possible with modern supercomputers.

“We are developing GLEAN to address the I/O bottleneck and provide a tool that will help ALCF users get the most out of our machines,” Vishwanath said.

In addition to enabling smarter and faster data movement, GLEAN improves I/O performance by leveraging the data semantics of applications. With knowledge of an application’s specific data model, GLEAN can be used to perform real-time data analysis and visualization during large-scale simulation runs. Known as in situ analysis, this approach allows researchers to quickly analyze data on the fly, giving them flexibility to pursue new questions that arise.
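The idea behind in situ analysis can be illustrated with a toy example. The sketch below is not GLEAN's interface; the function name, the random-walk "simulation," and the summary fields are all assumptions made for illustration. It shows only the general pattern: analyze each time step while the data is still in memory and keep a small summary, rather than writing the full dataset to storage for later processing.

```python
import random
import statistics


def simulate_with_in_situ_analysis(num_steps, num_particles, seed=0):
    """Toy simulation (hypothetical) that analyzes each step in memory."""
    rng = random.Random(seed)
    positions = [0.0] * num_particles
    summaries = []

    for step in range(num_steps):
        # Placeholder "physics": every particle takes a small random step.
        positions = [x + rng.uniform(-1.0, 1.0) for x in positions]

        # In situ analysis: reduce the full dataset to a small summary
        # while it is still in memory, instead of writing it all to disk.
        summaries.append({
            "step": step,
            "mean": statistics.fmean(positions),
            "spread": max(positions) - min(positions),
        })

    return summaries


if __name__ == "__main__":
    for summary in simulate_with_in_situ_analysis(num_steps=3, num_particles=1000):
        print(summary)
```

Because each summary is available as soon as its step finishes, a researcher could inspect the results during the run and decide what to look at next, which is the flexibility the article describes.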

Asynchronous data staging is another key capability of GLEAN. Instead of writing I/O data directly to storage, this feature allows data to be written first to the memory of a designated set of nodes, which can then move it to storage in the background. “Writing to memory is faster than writing to storage. And once it’s written to the memory of those nodes, the simulation can progress ahead,” said Vishwanath.
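The staging pattern can be sketched on a single machine. This is a minimal illustration, not GLEAN's implementation: an in-memory queue stands in for the memory of the staging nodes, a background thread stands in for the process that later moves data to storage, and the function name and file naming are hypothetical.

```python
import os
import queue
import tempfile
import threading


def run_simulation(num_steps, out_dir):
    """Toy simulation (hypothetical) that stages output in memory."""
    staging = queue.Queue()  # stands in for the staging nodes' memory

    def drain():
        # Background writer: moves staged buffers to storage.
        while True:
            item = staging.get()
            if item is None:  # sentinel: no more data will arrive
                break
            step, payload = item
            path = os.path.join(out_dir, f"step_{step:04d}.bin")
            with open(path, "wb") as f:
                f.write(payload)

    writer = threading.Thread(target=drain)
    writer.start()

    state = 0
    for step in range(num_steps):
        state += step  # placeholder for the real computation
        payload = state.to_bytes(8, "little")
        # Fast in-memory hand-off: the loop does not wait for the disk.
        staging.put((step, payload))

    staging.put(None)
    writer.join()  # make sure all staged data has reached storage
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as out_dir:
        final_state = run_simulation(5, out_dir)
        print(final_state, sorted(os.listdir(out_dir)))
```

The simulation loop only pays the cost of placing data in the queue; the slower write to storage overlaps with later compute steps. On a real supercomputer the hand-off would cross the network to separate nodes, which this single-process sketch does not model.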

Vishwanath and his colleagues are working with ALCF users, including researchers at Argonne, the University of Chicago’s FLASH Center for Computational Science, and the University of Colorado, to demonstrate how GLEAN can help resolve the I/O bottleneck in their scientific applications.

For example, with HACC, Argonne’s large-scale cosmology code, GLEAN allowed the team to achieve a nearly tenfold performance improvement over the code’s previous I/O mechanism, paving the way for some of the most detailed and largest-scale simulations of the universe ever performed.

“We expect GLEAN to be an important tool for the current generation of data-centric supercomputing and also provide insight for the design of I/O architectures for future exascale systems,” said Vishwanath.

GLEAN is being developed as an open source software tool. For more information, contact Vishwanath at venkat@anl.gov.