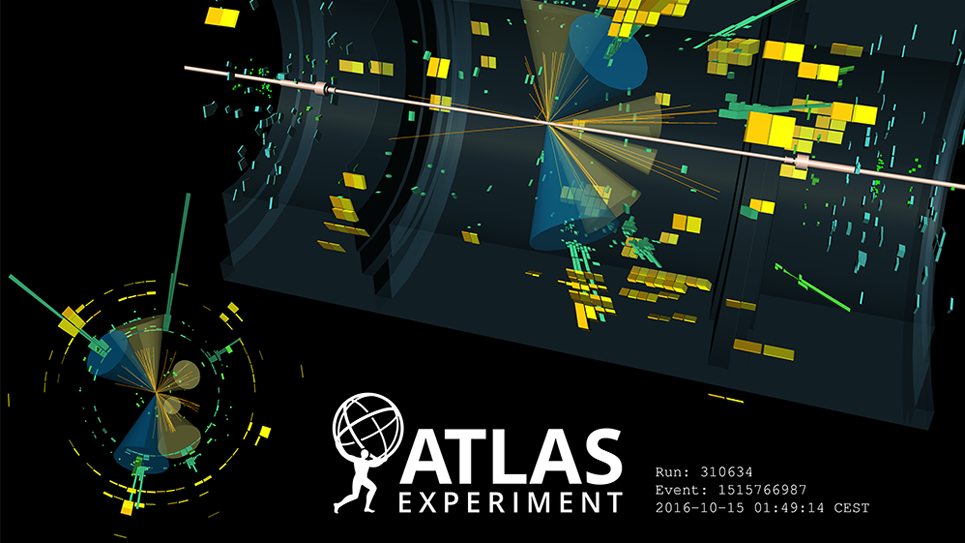

A candidate event in which a Higgs boson is produced in association with a top quark and an anti-top quark, which decay to jets of particles. The challenge is to identify and reconstruct this type of event in the presence of background processes that have similar signatures but are thousands of times more likely. (Image: CERN)