Adventures in parallelism: Celebrating 30 years of parallel computing at Argonne

Were they visionaries or lucky gamblers? Thirty years ago, researchers at the U.S. Department of Energy’s Argonne National Laboratory established the lab’s first experimental parallel computing facility. Today, high-performance computing has become essential to virtually all science and engineering, and Argonne houses one of the fastest parallel machines in the world in support of scientific discovery.

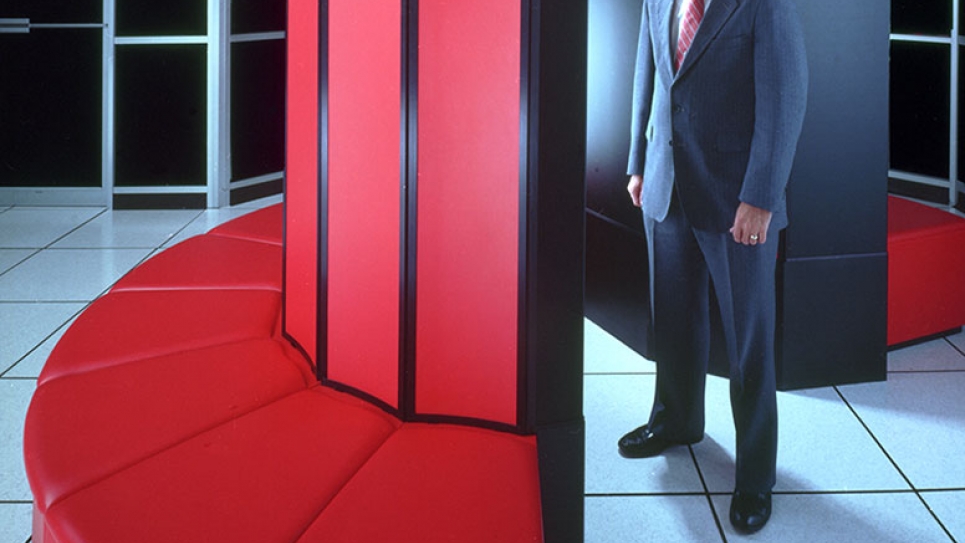

The early 1980s saw a gradual but seismic shift in computing architectures – from traditional serial machines, which relied on a single fast processor to work on problems, to a model that employed many not-so-fast processors ganged together. Programming parallel computers was more difficult, but the projected benefits made the effort worthwhile. Over time, researchers figured out ways to use parallel machines for essentially all types of scientific applications.

The rise of parallel computing was an ideological departure from the multi-million-dollar mode of supercomputing, in that it achieved more computing power by stringing together hundreds – and eventually hundreds of thousands – of microprocessors, all running calculations at the same time, or “in parallel.”

These tiny and powerful components, coupled with memory and an interconnect, were the building blocks of a new computing paradigm. They attracted the attention of the computer engineering community and led to an explosion of different parallel computing architectures in the span of a decade.

In response, believing parallel computing to be the only realistic way to continue scaling up in power, Argonne established the Advanced Computing Research Facility (ACRF) in 1983. Housing as many as 10 radically different parallel computer designs, the ACRF enabled applied mathematicians and computer scientists to learn parallel computing and experiment with virtually every emerging parallel architecture. Moreover, the ACRF provided a platform for collaborations with industry, academia and other government research laboratories. Using the diverse computers in the ACRF, investigators developed new techniques to exploit parallelism in scientific research. Emphasis was given to the interaction between algorithms, the software environment and advanced computer architectures; the portability of programs without a sacrifice in performance; and the design of parallel programming tools and languages.

By 1991, with computational science explicitly recognized as a new paradigm for scientific investigation, the U.S. government inaugurated the first major federal program to develop the hardware, software and human resources needed to solve “Grand Challenge” problems.

Again, Argonne was at the forefront. Building on its experience with parallel computing, the laboratory acquired an IBM SP – the first scalable, parallel system to offer multiple levels of I/O capability (the speed to read and write data) essential for increasingly complex scientific applications. By 1997, Argonne's supercomputing center was recognized by the U.S. Department of Energy as one of the nation's four high-end resource providers. Working with IBM, Argonne researchers explored tools, software and I/O systems so that researchers could exploit the full potential of the SP. Workshops were also held for those using the SP system for scientific applications such as enzyme reaction simulation, Monte Carlo nuclear physics calculations and climate modeling.

Argonne’s most recent foray into production computing began in 2004, when the laboratory formed the Blue Gene Consortium with IBM and other national laboratories. The objective was to design, evaluate and develop code for a series of massively parallel computers. The following year saw the establishment of the Argonne Leadership Computing Facility (ALCF) and the installation of a 5-teraflops IBM Blue Gene/L, followed in 2007 by a 557-teraflops Blue Gene/P.

ALCF computational scientists and performance engineers work closely with Argonne’s Mathematics and Computer Science Division – the seedbed for such groundbreaking software as BLAS3, p4, ADIFOR, PETSc and MPICH. The ALCF’s Blue Gene system principally supports projects through the DOE’s Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program. This year, the ALCF is committed to delivering over two billion core-hours on Mira, its newly installed IBM Blue Gene/Q machine, which is already in full production mode.

On May 14-15, Argonne will host many of the visionaries at the lab and at other institutions who initiated and contributed to Argonne’s history of advancing parallel computing and computational science. The tradition continues as Argonne explores new paths and paves the way toward exascale computing. Please check the agenda on the event website to view the presentations.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation's first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America's scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy's Office of Science.