ALCF supercomputers help address LHC’s growing computing needs

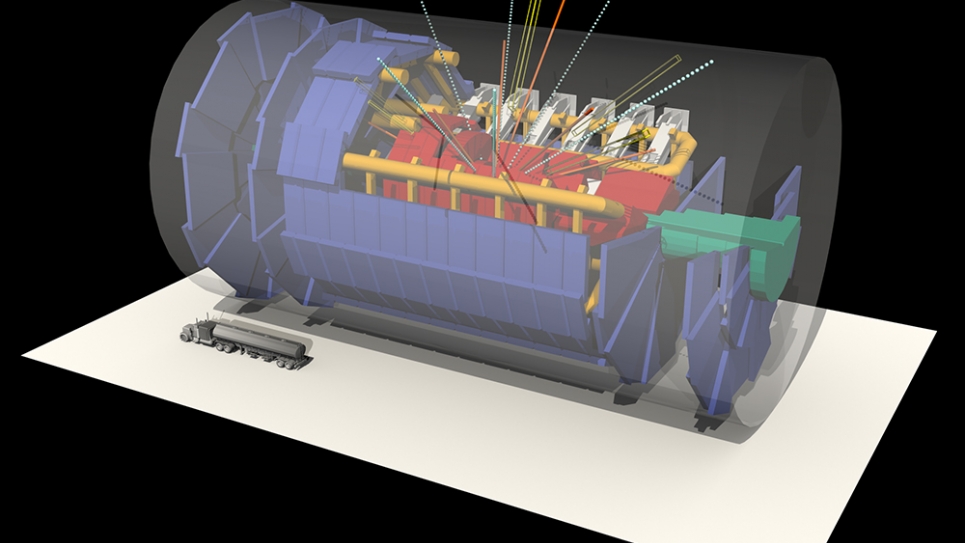

Particle collision experiments at CERN’s Large Hadron Collider (LHC)—the world’s largest particle accelerator—will generate around 50 petabytes (50 million gigabytes) of data this year that must be processed and analyzed to aid in the facility’s search for new physics discoveries.

That already-massive amount of data is expected to grow significantly when CERN transitions to the High-Luminosity LHC, a facility upgrade now under way, with operations planned for 2026.

“With the High-Luminosity LHC, we’re expecting 20 times the data that are produced by today’s LHC runs,” said Taylor Childers, a physicist at the U.S. Department of Energy’s (DOE) Argonne National Laboratory and a member of the ATLAS experiment, one of the four particle detectors at the LHC. “Based on our best estimates, we’ll need about a factor of 10 increase in computing resources to handle the increased amount of data.”

Childers is part of a team exploring the use of supercomputers at the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility, to help meet the LHC’s growing computing demands. His collaborators include Argonne physicist Thomas LeCompte, ALCF computer scientist Tom Uram, and Duke University researcher Doug Benjamin.

Since 2002, scientists at the LHC, located on the border of France and Switzerland, have relied on the Worldwide LHC Computing Grid for all their data processing and simulation needs. The grid is an international distributed computing infrastructure that links 170 computing centers across 42 countries, allowing data to be accessed and analyzed in near real-time by an international community of more than 10,000 physicists working on the LHC’s four major experiments.

The LHC Computing Grid continues to serve the community well, but through their work at the ALCF, Childers and his colleagues are showing that supercomputers can serve as a powerful complement for data simulation and analysis. The team is using the ALCF’s petascale systems to demonstrate that supercomputers can perform increasingly precise simulations, as well as calculations that are too intensive for traditional computing resources.

“By enabling a portion of the LHC grid’s workload to run on ALCF supercomputers, we can speed the production of simulation results, which will accelerate our efforts to search for evidence of new particles,” Childers said.

Over the past few years, the team has been using Mira, the ALCF’s 10-petaflops IBM Blue Gene/Q supercomputer, to carry out calculations for the ATLAS experiment as part of an ASCR Leadership Computing Challenge (ALCC) project. Working with ALCF researchers, they scaled Alpgen, a Monte Carlo-based event generator, to run efficiently on the system, enabling the simulation of millions of particle collision events in parallel, while freeing the LHC Computing Grid to run other, less compute-intensive jobs.
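
The pattern described here, many independent workers each generating their own batch of collision events, can be illustrated with a toy sketch. This is not Alpgen and not the team's code: the "event" is a single made-up transverse momentum value, the function names and the 50 GeV cut are hypothetical, and Python threads stand in for the parallel processes a supercomputer would use, so the sketch shows the structure rather than any real speedup.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def generate_events(seed, n_events, pt_scale=20.0):
    """Generate toy events: one transverse momentum value (GeV) per event."""
    # Each worker gets its own seeded generator so that streams are
    # independent of each other and reproducible from run to run.
    rng = random.Random(seed)
    return [rng.expovariate(1.0 / pt_scale) for _ in range(n_events)]


def count_passing(seed, n_events, pt_cut=50.0):
    """Count the toy events whose momentum exceeds a hypothetical cut."""
    return sum(1 for pt in generate_events(seed, n_events) if pt > pt_cut)


def run_workers(n_workers, events_per_worker, base_seed=12345):
    """Run independent workers and combine their counts at the end."""
    seeds = [base_seed + rank for rank in range(n_workers)]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        counts = list(pool.map(lambda s: count_passing(s, events_per_worker), seeds))
    return sum(counts), n_workers * events_per_worker


if __name__ == "__main__":
    passed, total = run_workers(n_workers=4, events_per_worker=100_000)
    print(f"{passed} of {total} toy events passed the cut")
```

Because the workers share nothing until the final sum, the same structure extends naturally from a handful of threads to a very large number of processes, which is what makes Monte Carlo event generation a good fit for a large parallel machine.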

The team is now working to bring Theta, the ALCF’s new Intel-Cray system, into the fold through an allocation from the ALCF Data Science Program (ADSP). This pioneering initiative is designed to explore and improve computational and data science methods that help researchers gain insights into very large datasets produced by experimental, simulation, or observational methods.

For this effort, the ADSP team is migrating and optimizing the ATLAS collaboration’s research framework and production workflows to run on Theta. Their goal is to create an end-to-end workflow on ALCF computing resources that is capable of handling the ATLAS experiment’s intensive computing tasks—event generation, detector simulations, reconstruction, and analysis.

As a first step, the ALCF is working to deploy HTCondor-CE, a “gateway” software tool developed by the Open Science Grid to authorize remote users and provide a resource provisioning service. HTCondor-CE has already been installed on Cooley, the ALCF’s visualization and analysis cluster, but work continues to get it up and running on Theta. This effort requires writing code to allow HTCondor-CE to interact with the ALCF’s job scheduler, Cobalt, as well as making modifications to the facility’s authentication policy.
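
To give a sense of what the glue between a gateway and a local scheduler might involve, the sketch below translates a generic job description into a Cobalt-style `qsub` command line. It is an illustration only, not the code written at the ALCF: the dictionary fields (`project`, `nodes`, `walltime_seconds`, `queue`, `executable`, `arguments`) are hypothetical, and the options `-A` (project), `-n` (node count), `-t` (wall time in minutes), and `-q` (queue) are assumed to follow common Cobalt usage. The function only builds the argument list and does not submit anything.

```python
import math


def build_cobalt_command(job):
    """Translate a generic job description (hypothetical fields) into
    an argument list for a Cobalt-style qsub call."""
    nodes = int(job["nodes"])
    if nodes < 1:
        raise ValueError("a job must request at least one node")

    # Assumption: wall time is passed in whole minutes, so round
    # the requested seconds up rather than down.
    minutes = max(1, math.ceil(job["walltime_seconds"] / 60))

    cmd = ["qsub", "-A", job["project"], "-n", str(nodes), "-t", str(minutes)]
    if job.get("queue"):
        cmd += ["-q", job["queue"]]

    cmd.append(job["executable"])
    cmd.extend(job.get("arguments", []))
    return cmd


if __name__ == "__main__":
    example = {
        "project": "ExampleProject",   # placeholder allocation name
        "nodes": 8,
        "walltime_seconds": 3570,
        "executable": "run_sim.sh",    # placeholder script
        "arguments": ["--events", "1000"],
    }
    print(" ".join(build_cobalt_command(example)))
```

Returning a list rather than a single string keeps each argument separate, so a caller could hand it to `subprocess.run` without shell quoting issues.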

“With HTCondor-CE, the researchers will be able to run simulations automatically through their workflow management system,” said Uram. “The interface will simplify the integration of ALCF resources as production compute endpoints for projects that have complex workflows like ATLAS.”

To test the HTCondor-CE setup, the ADSP team has successfully carried out end-to-end production jobs on Cooley, paving the way for larger runs on Theta in the near future.

Once the simulation workflow is in place, ATLAS researchers will benefit greatly from the ability to carry out multiple computing steps at one facility. With this approach, they will no longer have to store intermediate data, as is necessary when different simulation steps are carried out at different locations. The ALCF workflow will also reduce compute time by bypassing the setup and finalization time that is required for each step when performed independently.
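
The compute-time argument can be made concrete with a small back-of-the-envelope model. The step names follow the tasks listed earlier in the article, but every number below is invented for illustration, and the model assumes that a chained workflow pays setup once before the first step and finalization once after the last, which is a simplification rather than a measured property of the ATLAS workflow.

```python
def independent_time(steps):
    """Total time when every step pays its own setup and finalization."""
    return sum(s["setup"] + s["run"] + s["finalize"] for s in steps)


def chained_time(steps):
    """Total time when the steps run back to back at one facility.

    Assumption: setup is paid once before the first step and
    finalization once after the last step.
    """
    return steps[0]["setup"] + sum(s["run"] for s in steps) + steps[-1]["finalize"]


# Hypothetical timings in minutes; not measurements from ATLAS or the ALCF.
steps = [
    {"name": "event generation",    "setup": 5, "run": 30,  "finalize": 3},
    {"name": "detector simulation", "setup": 5, "run": 120, "finalize": 3},
    {"name": "reconstruction",      "setup": 5, "run": 60,  "finalize": 3},
    {"name": "analysis",            "setup": 5, "run": 20,  "finalize": 3},
]

if __name__ == "__main__":
    separate = independent_time(steps)
    chained = chained_time(steps)
    print(f"independent: {separate} min, chained: {chained} min, "
          f"saved: {separate - chained} min")
```

In this model the saving grows with the number of steps and with the per-step overhead, while the time spent on the simulation work itself is unchanged.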

The team is planning to extend this workflow to their ongoing work with DOE computing resources at the Oak Ridge Leadership Computing Facility at Oak Ridge National Laboratory and the National Energy Research Scientific Computing Center at Lawrence Berkeley National Laboratory, both DOE Office of Science User Facilities.

This research is supported by the DOE Office of Science. Some early funding was provided by DOE’s High Energy Physics – Center for Computational Excellence (HEP-CCE).

This work was also featured in the September/October issue of Computing in Science & Engineering.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation's first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America's scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy's Office of Science.

The U.S. Department of Energy's Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit the Office of Science website.