Aurora

Aurora: Advancing Science with Exascale Simulation, AI, and Data Capabilities

The ALCF’s Aurora exascale supercomputer provides researchers with a powerful platform to pursue scientific discovery at unprecedented scale and speed. Designed to support open scientific research across large-scale simulation, AI, and data analysis, Aurora brings these capabilities together within a single system to enable integrated scientific workflows.

Developed in partnership with Intel and Hewlett Packard Enterprise and launched in January 2025, Aurora combines more than 60,000 GPUs with advanced compute, networking, and storage technologies. This architecture supports research campaigns that span high-fidelity modeling of complex physical systems, large-scale AI training and inference, and the analysis of massive experimental and observational datasets.

Aurora’s design delivers the computational performance, memory bandwidth, and data processing throughput needed to capture multiscale phenomena, support demanding AI workloads, and carry out in situ and data-intensive analysis. Together, these features enable research teams to explore questions with greater fidelity and efficiency than was possible on previous-generation supercomputers.

Enabling Breakthrough Research

Researchers are using Aurora to tackle complex problems across a diverse range of research fields. Examples include:

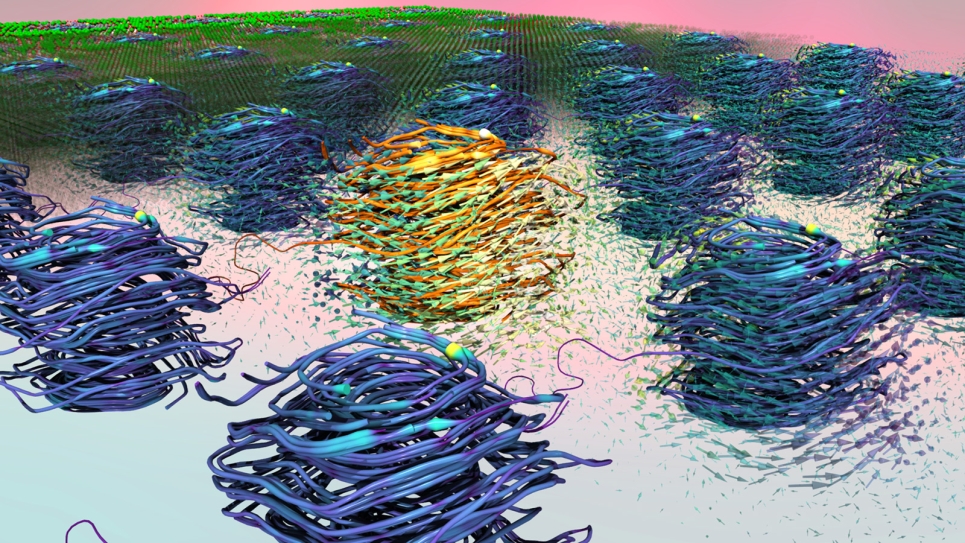

- Materials and Drug Discovery: Researchers use Aurora to explore vast molecular and material spaces through large-scale simulations and AI-driven modeling and prediction. These capabilities help accelerate the design and discovery of novel drugs and therapeutics to fight cancer and other diseases, as well as new materials for applications such as batteries, catalysts, and quantum computing.

- Energy Technologies: Developing next-generation nuclear reactors and fusion energy devices requires simulating extreme conditions and predicting system behavior. Aurora's powerful simulation and AI capabilities enable researchers to model complex environments, optimize designs, and drive innovation in nuclear and fusion energy.

- Aerospace and Engineering: Solving complex engineering challenges requires highly detailed models of everything from aircraft components to manufacturing workflows. Researchers use Aurora to simulate scenarios that are impossible or too costly to test experimentally, helping predict performance, optimize designs, and streamline technology development.

- Cosmology and Astrophysics: Aurora enables scientists to study supernova explosions, the expansion of the universe, and other astrophysical and cosmological phenomena that cannot be recreated in a laboratory. These efforts help researchers interpret observations from state-of-the-art telescopes, shedding new light on the nature of the universe.

Collaborative Development: Building and Preparing Aurora for Science

Aurora was developed through a long-term co-design process that aligned hardware innovations with software and application needs, bringing together system architects, software developers, and scientific teams. Carried out in close collaboration with Intel and Hewlett Packard Enterprise, this process integrated state-of-the-art processor, accelerator, networking, and storage technologies to create a system capable of supporting science at unprecedented scale.

In parallel, researchers participating in DOE’s Exascale Computing Project and the ALCF’s Aurora Early Science Program ported, optimized, and tested scientific applications on early hardware and software environments. These efforts ensured that critical applications, libraries, and tools were ready when Aurora entered production.

As a result of this collaborative approach, Aurora was ready for science on day one of its deployment. The system now supports a wide range of projects awarded time through DOE’s INCITE and ALCC allocation programs.