Mira: An Engine for Discovery

Every day, researchers around the country are working to understand mysterious phenomena, from the origins of the universe to chemical processes deep within the Earth’s mantle. The sheer scale of these studies demands ever more sophisticated tools.

The advent of petascale supercomputers now allows these same researchers to conduct calculations and simulations on unprecedented scales, and the Argonne Leadership Computing Facility (ALCF) houses one of the fastest in the world: Mira, an IBM Blue Gene/Q supercomputer whose architecture is the result of a co-design collaboration between Argonne National Laboratory, Lawrence Livermore National Laboratory, and IBM.

Mira can carry out 10 quadrillion calculations per second. Its enormous computational power can be attributed to a symbiotic architecture that combines novel designs for fine- and coarse-grain parallelism with corresponding network and I/O capabilities that redefine standards in supercomputer processing and networking.

With 10 times as much RAM and 20 times the peak floating-point speed, Mira far exceeds the capabilities of its immediate predecessor, Intrepid, an IBM Blue Gene/P.

“Mira’s innovative architecture is enabling simulations of unprecedented scale and accuracy, which allow scientists to tackle more complex problems and achieve faster times to solutions,” says Michael Papka, director of the ALCF. “Based on the early results being reported by Mira users, our new supercomputer will be a game changer for the entire research community.”

Already, Mira’s high-end hardware features are enabling scientists to take their research projects to new heights. This article discusses how the following projects are benefiting from various Blue Gene/Q architectural innovations.

- Scientists from the University of Texas at Austin are taking advantage of Mira’s node-level parallelism to study turbulent fluid flows in greater detail than ever before.

- A research team from the University of Colorado, Boulder is leveraging Mira’s 5D torus interconnect to efficiently integrate a huge range of length and time scales in its flow control actuator simulations.

- A joint project with researchers from Brookhaven and Oak Ridge national laboratories is exploiting Mira’s L2 cache atomics to accelerate studies of chemical phenomena associated with energy production and storage.

Architecture Elevated

Mira’s leap in speed and power begins with its increased node-level parallelism, which, in essence, raises the number of tasks each core can perform. A single compute card, or node, contains a 16-core processor chip and 16 GB of memory. Where each of the four cores on an Intrepid node could run only one task apiece, a feature called simultaneous multithreading (SMT) allows each Mira core to run four simultaneous threads of execution.
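The thread counts above multiply out simply: 16 cores with four SMT threads each give 64 hardware threads per node, against four on an Intrepid node. The Python sketch below shows that arithmetic and how a loop might be divided among those 64 threads. The `static_chunks` helper and the 1,000-iteration loop are hypothetical, and the split only approximates what an OpenMP `schedule(static)` loop typically does.

```python
# Hardware threads per node = cores x SMT threads per core (figures from the article).
MIRA_CORES, MIRA_SMT = 16, 4          # Blue Gene/Q: four threads per core
INTREPID_CORES, INTREPID_SMT = 4, 1   # Blue Gene/P: one task per core

def hw_threads(cores: int, smt: int) -> int:
    return cores * smt

def static_chunks(n_iters: int, n_threads: int) -> list[range]:
    """Hypothetical helper: split loop iterations into contiguous blocks, one per
    thread, similar to an OpenMP schedule(static) loop (the exact split is
    implementation-defined in OpenMP)."""
    base, extra = divmod(n_iters, n_threads)
    chunks, start = [], 0
    for t in range(n_threads):
        size = base + (1 if t < extra else 0)  # the first `extra` threads take one more
        chunks.append(range(start, start + size))
        start += size
    return chunks

mira = hw_threads(MIRA_CORES, MIRA_SMT)
intrepid = hw_threads(INTREPID_CORES, INTREPID_SMT)
print(mira, intrepid)  # 64 4

chunks = static_chunks(1000, mira)
print(len(chunks), len(chunks[0]), len(chunks[-1]))  # 64 16 15
```

Contiguous blocks keep each thread's data close together in memory, which is why static scheduling is a common default for regular loops.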

This is further enhanced by the Quad Processing eXtension (QPX), which allows single instruction, multiple data (SIMD) operations on each thread. SIMD operations deliver multiple computational results for the cost of a single instruction, making numerical computing more power-efficient. On Mira, each QPX instruction works on four double-precision values at once, and because it can perform a fused multiply-add (a multiply plus an add) on each value, a single instruction yields eight floating-point operations.
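The eight-operations figure follows from QPX's width: four double-precision lanes, each doing a fused multiply-add (two operations). The sketch below models one such instruction in plain Python and then derives peak rates, assuming Blue Gene/Q's published 1.6 GHz clock, Mira's 49,152 compute nodes, and one QPX fused multiply-add issued per core per cycle; none of those three figures appears in this article. The result comes out near 10 petaflops, consistent with the 10 quadrillion calculations per second quoted above.

```python
LANES = 4          # QPX operates on four double-precision values at once
FLOPS_PER_FMA = 2  # a fused multiply-add counts as one multiply plus one add

def qpx_fma(a, b, c):
    """Toy model of one 4-wide fused multiply-add: returns a*b + c per lane
    and the number of floating-point operations the instruction represents."""
    assert len(a) == len(b) == len(c) == LANES
    result = [x * y + z for x, y, z in zip(a, b, c)]
    return result, LANES * FLOPS_PER_FMA

vals, flops = qpx_fma([1.0, 2.0, 3.0, 4.0], [2.0, 2.0, 2.0, 2.0], [0.5] * 4)
print(vals, flops)  # [2.5, 4.5, 6.5, 8.5] 8

# Assumed figures, not from the article: clock rate, node count, and one
# QPX FMA issued per core per cycle.
CLOCK_HZ = 1.6e9
CORES_PER_NODE = 16
NODES = 49_152
node_peak = CLOCK_HZ * CORES_PER_NODE * flops
system_peak = node_peak * NODES
print(f"{node_peak / 1e9:.1f} GFLOP/s per node")   # 204.8 GFLOP/s per node
print(f"{system_peak / 1e15:.2f} PFLOP/s system")  # 10.07 PFLOP/s system
```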

Both features are considered fine-grained parallelism or concurrency, designed with an emphasis on maximizing performance while minimizing both the hardware and power cost of operation.

Many researchers who were given early access to the computer through the ALCF’s Early Science Program (ESP) agree that this kind of performance is key to the detailed calculations needed to model complex processes, calculations that were not achievable before Mira.

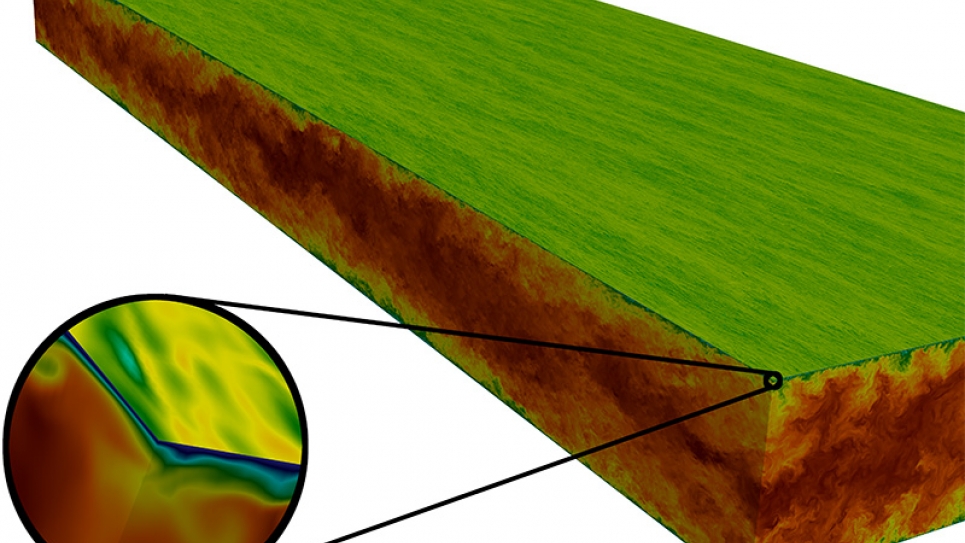

For example, researchers at the University of Texas at Austin are using these capabilities to realistically represent turbulent fluid flows, which are notoriously expensive to simulate.

“The reason is that such flows have high ‘Reynolds numbers,’ which in turbulence characterizes the range of spatial and temporal time scales that must be simulated,” says Robert Moser, professor and deputy director of the Institute for Computational Engineering and Sciences.

As a result, simulation sizes are extremely large. To solve such big problems, researchers must break them into smaller pieces that many processing cores can work on simultaneously. Moser’s simulations on Mira have used as many as 524,288 processing cores at once, two-thirds of Mira’s 786,432 cores.
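The two-thirds figure checks out against Mira's 786,432 compute cores (49,152 nodes of 16 cores each, a published figure not given in this article). The sketch also illustrates the basic idea of breaking a problem into pieces: a hypothetical 3D grid block-decomposed over a 64 × 64 × 128 arrangement of those 524,288 cores. The grid size and process layout are invented for illustration and are not Moser's actual decomposition.

```python
from fractions import Fraction
from math import prod

NODES, CORES_PER_NODE = 49_152, 16    # published Mira figures, not from this article
TOTAL_CORES = NODES * CORES_PER_NODE  # 786,432
USED_CORES = 524_288                  # largest runs cited in the article

print(TOTAL_CORES, Fraction(USED_CORES, TOTAL_CORES))  # 786432 2/3

def block_size(grid, procs):
    """Local block each process owns when a 3D grid is split evenly over a
    3D process grid. Assumes every dimension divides evenly (a simplification)."""
    for n, p in zip(grid, procs):
        if n % p:
            raise ValueError(f"{n} points do not divide evenly over {p} processes")
    return tuple(n // p for n, p in zip(grid, procs))

# Hypothetical problem: a 2048 x 2048 x 4096 grid on a 64 x 64 x 128 process layout.
procs = (64, 64, 128)
assert prod(procs) == USED_CORES
local_block = block_size((2048, 2048, 4096), procs)
print(local_block, prod(local_block))  # (32, 32, 32) 32768
```

Each core then updates only its own 32 × 32 × 32 block, exchanging boundary values with neighboring blocks, which is why the network discussed next matters so much.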

Novel Networking

Computing at this scale would not be achievable without an equally sophisticated communications network that lets groups of nodes receive and disseminate information quickly and with little interference or contention.

Making this possible is the Blue Gene/Q’s 5D torus interconnect, a scalable network with the capacity to connect nearly 8.4 million cores. Because it is designed to provide greater bandwidth and lower latency than a 3D torus, it can relay more information simultaneously, with less time between dispatch and delivery.

Envisioning a five-dimensional network can seem alien even to some of the engineers working with Mira, but the key difference is easy to grasp: where the Blue Gene/P’s 3D torus linked each node directly to six neighbors, the 5D version gives each node 10 direct connections, so information can be accessed more quickly.
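The neighbor counts can be checked with a little modular arithmetic. In a torus, each node links to the next node in the positive and negative direction along every dimension, with links wrapping around at the edges, so a 3D torus gives 6 neighbors and a 5D torus gives 10. The sketch below also computes the worst-case hop count for two hypothetical 4,096-node tori, which hints at why more dimensions can lower latency. The dimension sizes are illustrative, not Mira's actual layout, and each must be at least 3 for the two links along an axis to reach distinct nodes.

```python
def torus_neighbors(coord, dims):
    """Nearest neighbors of `coord` on a torus: one step in the + and -
    direction along each dimension, wrapping around at the edges."""
    neighbors = set()
    for axis, size in enumerate(dims):
        for step in (-1, 1):
            nbr = list(coord)
            nbr[axis] = (nbr[axis] + step) % size  # wraparound link
            neighbors.add(tuple(nbr))
    return neighbors  # a size-2 axis contributes only one distinct neighbor

def torus_diameter(dims):
    """Worst-case hop count between two nodes: at most size // 2 hops per dimension."""
    return sum(size // 2 for size in dims)

print(len(torus_neighbors((0, 0, 0), (4, 4, 4))))              # 6
print(len(torus_neighbors((0, 0, 0, 0, 0), (4, 4, 4, 4, 4))))  # 10

# Two hypothetical layouts of the same 4,096 nodes.
print(torus_diameter((16, 16, 16)))     # 24
print(torus_diameter((8, 8, 4, 4, 4)))  # 14
```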

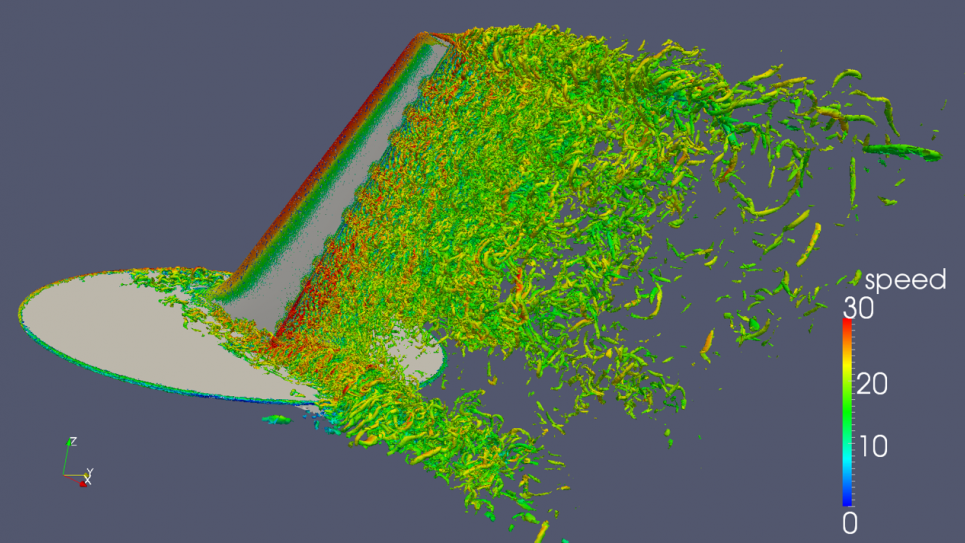

“Without a doubt, the 5D torus interconnect is critical,” says Ken Jansen, professor of aerospace engineering at the University of Colorado, Boulder. Jansen’s project, Petascale, Adaptive CFD (Computational Fluid Dynamics), was also part of ALCF’s ESP.

Jansen’s team is developing aerodynamic simulations to better understand how dynamic flow control actuators alter turbulent flow in ways that improve performance. As in Moser’s work, simulations of flow control actuators applied to real aeronautical flows span a huge range of length and time scales, which, Jansen notes, are integrated much more efficiently with implicit methods.

“These methods require the very fast global synchronizations across the whole machine that a 5D torus provides,” he adds.
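Why implicit methods lean on global synchronization: each time step typically solves a large linear system with an iterative solver, and the dot products and norms inside that solver need a sum across every process, an operation MPI calls an allreduce. The sketch below simulates one classic allreduce algorithm, recursive doubling, inside a single Python process. The local values are hypothetical, and the article does not say which algorithm Mira's MPI or network hardware actually uses.

```python
def recursive_doubling_allreduce(values):
    """Simulate a sum-allreduce across P ranks (P a power of two): in each step,
    rank r adds the partial sum held by rank r XOR distance, and distance doubles.
    After log2(P) steps every rank holds the global sum."""
    p = len(values)
    if p == 0 or p & (p - 1):
        raise ValueError("this sketch assumes a power-of-two number of ranks")
    partial = list(values)
    steps, distance = 0, 1
    while distance < p:
        # The comprehension reads the previous step's values, as in a real exchange.
        partial = [partial[r] + partial[r ^ distance] for r in range(p)]
        distance *= 2
        steps += 1
    return partial, steps

# Hypothetical local dot-product contributions from 8 ranks.
local = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
result, steps = recursive_doubling_allreduce(local)
print(result[0], steps)  # 36.0 3
```

The step count grows only as log2(P), but every step waits on the slowest exchange, so the network's latency sets the pace of each solver iteration.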

The Nuts and Bolts: What You Don’t See Can Help You

Buttressing the main architecture is a suite of custom features that help users access and modify data more efficiently. Among them are the L1 cache prefetching unit and the L2 cache atomics unit, which reduce the time it takes to access memory and to synchronize threads within a node, respectively.

The L1 cache prefetcher, which supports both streaming and perfect prefetching, greatly reduces the time spent waiting for memory accesses. This is important for single-core speed: the less time spent waiting for data, the faster an application runs. While most systems have some inherent prefetch capability, Mira’s is specifically tuned for the heavy workloads run on the system. The prefetcher tries, often successfully, to predict which data the user will need next and fetch it before it is requested, so that the data appears to be available immediately.
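The idea behind streaming prefetch can be shown with a toy model: once the prefetcher sees accesses to consecutive cache lines, it fetches the next few lines before they are requested. The sketch below counts demand misses with and without that behavior. It assumes an unlimited cache and a hypothetical `prefetch_depth` parameter, and it models neither perfect prefetching nor the real L1 prefetch hardware.

```python
def count_misses(accesses, prefetch_depth=0):
    """Count demand misses for a sequence of cache-line numbers.
    Toy model: unlimited cache capacity; when two consecutive accesses hit
    adjacent lines (a detected stream), the next `prefetch_depth` lines are
    fetched ahead of time."""
    resident = set()
    misses = 0
    prev = None
    for line in accesses:
        if line not in resident:
            misses += 1          # the core stalls waiting for this line
            resident.add(line)
        if prefetch_depth and prev is not None and line == prev + 1:
            resident.update(range(line + 1, line + 1 + prefetch_depth))
        prev = line
    return misses

stream = list(range(100))        # a unit-stride sweep over 100 cache lines
print(count_misses(stream))      # 100: every line is fetched on demand
print(count_misses(stream, 4))   # 2: only the first two accesses miss
```

An irregular pattern such as `[5, 50, 17, 3]` gains nothing from this scheme, which is the gap that pattern-recording approaches like perfect prefetching aim to close.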

“The L2 cache atomics are magic,” says Jeff Hammond, an ALCF assistant computational scientist. “It’s one of the features I like the most.”

Atomic operations, which ensure that data is read and written correctly when multiple threads access it simultaneously, are implemented in the L2 cache rather than in the processor core, which dramatically reduces their cost, notes Hammond, who develops programming models and communication software for Mira.
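A classic use of atomics is many threads pulling work from a shared counter with fetch-and-add, so that no item is skipped or handed out twice. Python exposes no hardware atomic instructions, so the sketch below emulates fetch-and-add with a lock; on Blue Gene/Q the equivalent indivisible increment would be carried out in the L2 cache. The `AtomicCounter` class and the work-claiming loop are a generic, hypothetical illustration, not MADNESS's actual scheduler.

```python
import threading

class AtomicCounter:
    """Emulated atomic fetch-and-add: a lock stands in for the single
    indivisible hardware operation."""
    def __init__(self, value=0):
        self._value = value
        self._lock = threading.Lock()

    def fetch_add(self, delta=1):
        with self._lock:
            old = self._value
            self._value += delta
            return old  # the caller sees the value from before its own increment

N_ITEMS, N_THREADS = 1000, 64
next_item = AtomicCounter()
claimed = [0] * N_ITEMS

def worker():
    while True:
        i = next_item.fetch_add()
        if i >= N_ITEMS:
            break
        claimed[i] += 1  # each index is handed to exactly one thread

threads = [threading.Thread(target=worker) for _ in range(N_THREADS)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(all(c == 1 for c in claimed))  # True
```

Because every claim costs one fetch-and-add, the cheaper that operation is, the finer-grained the tasks a runtime can afford to schedule.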

He is currently working on a project with researchers at Brookhaven and Oak Ridge national laboratories that focuses on chemical phenomena associated with energy production and storage. Their code, MADNESS, is a multi-resolution numerical simulation framework built on a highly multi-threaded programming model, which benefits greatly from lightweight synchronization mechanisms such as L2 atomics. The combination is expected to make calculations run significantly faster and more efficiently.

The L2 cache has also been outfitted with other state-of-the-art technologies. For example, transactional memory manages the concurrent use of data by multiple threads: it detects conflicting operations within an atomic region and corrects for them, typically by discarding the conflicting work and retrying it. It is the first such hardware in a commercial computer.
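Transactional memory follows an optimistic pattern: run a region speculatively, check whether another thread changed the same data in the meantime, and roll back and retry on conflict. The sketch below is a software analogy in Python, assuming a simple version-number scheme and a hypothetical `transact` helper; it is not how Blue Gene/Q's L2 cache tracks conflicts, which is done in hardware.

```python
import threading

class VersionedCell:
    """A shared value plus a version number that every committed write bumps."""
    def __init__(self, value):
        self.value = value
        self.version = 0

_commit_lock = threading.Lock()  # guards only the short validate-and-commit step

def transact(cells, update):
    """Run `update` speculatively on a snapshot of `cells` and commit only if no
    other thread has committed to those cells since the snapshot; otherwise
    discard the work and retry. Returns the number of attempts."""
    attempts = 0
    while True:
        attempts += 1
        # Read each version before its value, so a concurrent commit is always
        # caught by the validation below.
        snapshot = [(c.version, c.value) for c in cells]
        new_values = update([value for _, value in snapshot])  # speculative work
        with _commit_lock:
            if all(c.version == ver for c, (ver, _) in zip(cells, snapshot)):
                for c, nv in zip(cells, new_values):
                    c.value = nv
                    c.version += 1
                return attempts
        # Conflict detected: loop around and redo the work on fresh data.

# Hypothetical usage: 8 threads each move 50 units from cell a to cell b.
a, b = VersionedCell(1000), VersionedCell(0)

def move_one(values):
    x, y = values
    return [x - 1, y + 1]

def worker():
    for _ in range(50):
        transact([a, b], move_one)

threads = [threading.Thread(target=worker) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(a.value, b.value)  # 600 400
```

No update is lost even when transactions conflict, because a conflicting one simply runs again; the cost of that design is wasted speculative work under heavy contention.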

The Foundation

IBM introduced many of these high-end hardware features into the Blue Gene/Q not because it expected every user to program them directly, but to implement Mira’s primary communication software, MPI (Message Passing Interface), efficiently. MPI makes extensive use of the L2 atomics, the QPX unit, and many of the network features, which gives users the benefits of these features without any extra programming effort.

Because Mira’s MPI implementation is internally multi-threaded, it can coordinate hardware features such as SMT, the L2 cache atomics, and the network when it needs to accelerate communication.

“Ninety-nine percent of the way computational science is done in the world is done with MPI. And a large fraction of those codes, especially for the big machines, also use OpenMP,” notes Hammond.

“So the most effective way for ALCF to address the needs of computational science is to collaborate on a machine that delivers the maximum performance of MPI and OpenMP, and provide essential and innovative hardware features so that users can walk in on day one, compile their code, and immediately make effective use of Mira.”

The Blue Gene/Q system demonstrates the major benefits that can be gained from innovative architectural features developed with a deep understanding of scientific computing requirements. Such an approach is already leading researchers down the path toward exascale computing. Able to perform computations a thousand times faster than today’s petascale systems, exascale machines will truly prove themselves engines of discovery.