ALCF summer students gain hands-on experience with supercomputing and AI research projects

This summer, more than 40 undergraduate and graduate students worked alongside ALCF mentors on cutting-edge research projects, gaining hands-on experience in high-performance computing (HPC) and artificial intelligence (AI).

At the Argonne Leadership Computing Facility (ALCF), a U.S. Department of Energy (DOE) Office of Science user facility at DOE’s Argonne National Laboratory, summer brought a surge of energy as student researchers joined the lab to take on real-world challenges in high-performance computing (HPC), AI, and computational science. This year’s cohort brought enthusiasm to every corner of the lab, from seminars and poster sessions to hands-on research projects using some of the world’s most powerful supercomputers.

“Through our summer program, students have the unique opportunity to engage directly with our expert computing staff and contribute to innovative projects powered by supercomputing and AI technologies,” said Michael Papka, ALCF Director and professor of computer science at the University of Illinois Chicago. “This hands-on experience strengthens their technical abilities and equips them to excel in future roles in the rapidly evolving landscape of HPC and AI.”

This year, ALCF hosted over 40 students working on projects ranging from developing scalable AI infrastructure to exploring the fields of quantum computing and machine learning. We spoke with five students about their work and what they’ll take away from their time at Argonne.

Coupling machine learning with scientific computing through in situ frameworks

Ayman Yousef, a PhD candidate in the Randles Lab at Duke University’s Biomedical Engineering department, joined the ALCF as a research aide this summer after previously collaborating with the lab’s visualization team. His research focuses on in situ frameworks that augment large-scale computational solvers, with an eye toward enabling machine learning models to serve as efficient surrogates in scientific computing workflows.

Yousef’s summer project investigated how machine learning models can be more seamlessly integrated with data-generating scientific codes. “There is a continued need for frameworks that help streamline training and inference routines thanks to an explosion in the utilization of machine learning models,” Yousef said. Working alongside ALCF mentors, Yousef explored lightweight, modular approaches to in situ model training and inference using widely used visualization and analysis tools such as ParaView and Catalyst.

Yousef’s work also ties into a broader effort to improve tools, tutorials, and documentation for the research community. “My work would be augmentative of this research, a case study on how existing tools can be co-opted for relevant in situ analysis and machine learning workflows,” he explained.

Yousef noted that one of the most impactful aspects of his experience was gaining exposure to the lab’s wide variety of ongoing projects. “I’ve been exposed to a vast number of projects researching science related to mine,” Yousef said. “This doesn’t even begin to account for the projects outside of my research group, with Argonne’s continued push in nuclear science, artificial intelligence, and digital twins.”

Looking ahead, Yousef hopes to translate this summer’s work into a future role as a machine learning engineer. “The experience of working at a national laboratory has given me the confidence to pursue opportunities I had previously deemed untenable,” he said.

Benchmarking distributed deep learning at scale

Musa Cim, a second-year PhD student in computer science at Penn State University, joined the ALCF this summer to develop DLcomm, a benchmarking framework for evaluating communication performance in distributed deep learning workloads. The project targets supercomputers such as ALCF’s Aurora and Polaris, profiling key metrics such as latency, bandwidth, and scalability across different GPU communication libraries.

Cim was motivated by the chance to work with some of the world’s most advanced HPC systems. “Access to a system like Aurora is extremely rare for graduate students… thousands of nodes and tens of thousands of GPUs is almost unheard of today,” Cim said. The DLcomm framework helps users pinpoint communication bottlenecks and tune backend settings to improve performance on large-scale AI and simulation workloads.
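To give a sense of what measuring latency and bandwidth involves, here is a minimal Python sketch. It is not DLcomm and does not use any GPU communication library; the names `CommResult`, `summarize`, and `benchmark` are hypothetical, and an in-memory copy stands in for a real collective operation. The idea is simply to time an operation repeatedly for a given message size, then report mean latency and the bandwidth that implies.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class CommResult:
    message_bytes: int
    mean_latency_s: float
    bandwidth_gb_per_s: float


def summarize(message_bytes: int, timings_s: List[float]) -> CommResult:
    """Turn raw per-iteration timings into latency and bandwidth figures."""
    if not timings_s:
        raise ValueError("at least one timing is required")
    mean_latency = sum(timings_s) / len(timings_s)
    # Bandwidth = bytes moved per second, reported in GB/s (1e9 bytes).
    if mean_latency > 0:
        bandwidth = message_bytes / mean_latency / 1e9
    else:
        bandwidth = float("inf")
    return CommResult(message_bytes, mean_latency, bandwidth)


def benchmark(op: Callable[[bytes], object], message_bytes: int,
              warmup: int = 2, iters: int = 10) -> CommResult:
    """Time `op` on a payload of the given size, skipping warmup runs."""
    payload = bytes(message_bytes)
    for _ in range(warmup):
        op(payload)  # warmup iterations are not timed
    timings = []
    for _ in range(iters):
        start = time.perf_counter()
        op(payload)
        timings.append(time.perf_counter() - start)
    return summarize(message_bytes, timings)


if __name__ == "__main__":
    # Assumption: bytearray(payload) (a memory copy) is a placeholder
    # for a real communication call such as an all-reduce.
    for size in (1 << 10, 1 << 20, 1 << 24):
        r = benchmark(bytearray, size)
        print(f"{r.message_bytes:>10} B  "
              f"{r.mean_latency_s * 1e6:10.2f} us  "
              f"{r.bandwidth_gb_per_s:8.2f} GB/s")
```

Sweeping the message size, as the loop at the bottom does, is what typically exposes the point where a workload shifts from being limited by latency to being limited by bandwidth.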

Reflecting on the technical impact of the work, Cim noted, “My biggest takeaway is a deeper understanding of how communication backends interact with large-scale HPC infrastructure, and how seemingly small configuration changes can have a major impact on performance.”

The experience also reinforced Cim’s research direction in HPC and AI, and broadened his perspective on career paths in large-scale AI infrastructure and national laboratories.

Exploring the practicality of quantum computing for scientific challenges

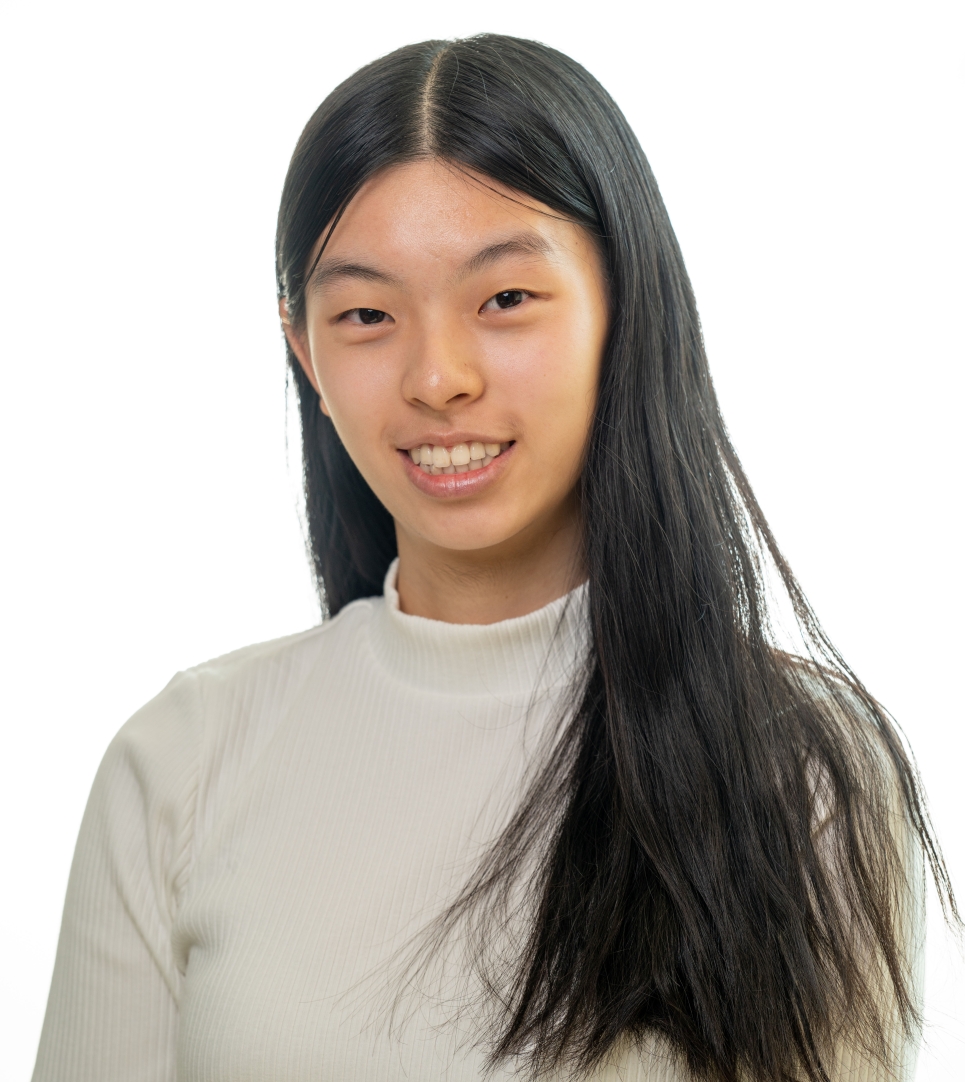

April Wang, a third-year undergraduate student at Northwestern University studying integrated science, computer science, and physics, joined the ALCF this summer through a DOE Science Undergraduate Laboratory Internship (SULI) to work on a project focused on quantum computing. Her research interests center on quantum computing algorithms and their applications, with an emphasis on interdisciplinary approaches.

Drawn by Argonne’s strong involvement in the Chicago Quantum Exchange and its robust computing resources, Wang said the opportunity aligned perfectly with her curiosity about quantum computing and other scientific fields.

Quantum computing holds promise for solving complex problems faster than classical computers, potentially expanding the range of scientific questions that facilities like ALCF can address. “By advancing our understanding of quantum computing’s potential and limitations, my project could contribute to eventually integrating quantum computers into facility capabilities,” Wang said.

Reflecting on the experience, Wang noted, “Being at Argonne has shown me how national labs approach research and how their approach differs from that of universities.” She added that this perspective helped her clarify her strengths and motivations as she plans her future studies and career.

Advancing physics problem-solving with large language models

Filomela Gerou, a recent physics graduate from the University of Michigan and incoming graduate student there, spent her summer at Argonne working in the Computational Sciences Division’s Cosmology and AI group. Her research focuses on integrating machine learning and AI into physics simulations, with the goal of advancing computational physics through approaches such as diffusion models, normalizing flows, and transformer architectures.

Drawn to the opportunity to work at a DOE national laboratory, Gerou joined the AuroraGPT project. Her work focused on developing a domain-specific framework for benchmarking chain-of-thought reasoning in large language models (LLMs), designed to replicate the step-by-step reasoning process physicists use when solving complex problems.

Gerou explained that the project not only benchmarks AuroraGPT in a way that is consistent with physical sciences research but also provides a sophisticated scoring system that offers insights such as case-by-case LLM feedback, mathematical evaluation, and metadata tracking, including latency and token usage. “Scaling this dataset to 10x-15x its current size, while maintaining quality and specification compliance, is within the realm of the Aurora supercomputer,” she said.

Reflecting on her summer, she said, “Not only have I had the opportunity to be a part of the real-time problem-solving meetings within ALCF, but have immediately felt I could contribute to the conversation.”

Looking ahead, Gerou is preparing a paper on her project and will present at an upcoming conference. She plans to continue working at the intersection of computational physics and AI, building on her experience with project architecture, scalable pipelines, and the application of AI to advance physical sciences.

Building virtual training environments for robotic arms

During his summer at the ALCF, Yassir Atlas, a computer science and mathematics student at the University of Illinois Urbana-Champaign, worked on developing virtual training environments for remote-controlled robotic arms used in hot cells, the shielded enclosures where radioactive materials are handled. The environments are used to train imitation learning models that automate routine hot-cell tasks.

Maneuvering these robotic arms can be difficult and time-consuming, even for mundane tasks like picking up rods or moving flasks. By automating such tasks through imitation learning, Atlas’s project aims to free scientists to focus on more complex work.

Reflecting on his summer, Atlas said, “My largest takeaway is that research is a series of work and not sparse momentous works. Though project proposals were grand and large, students and mentors were focused on establishing a trajectory of work with steady progress.”

Atlas added that this perspective will make him a more productive researcher and help him clearly identify and communicate engineering problems. “Regardless of whether I pursue industry or research, my experience at ALCF will help me work with clearer intent,” Atlas said.

Learn more about our educational programs

Argonne offers a variety of internships, research appointments, and cooperative education opportunities every year to hundreds of students. To learn more about student opportunities, visit the Educational Programs and Outreach webpage: anl.gov/education/graduate-programs