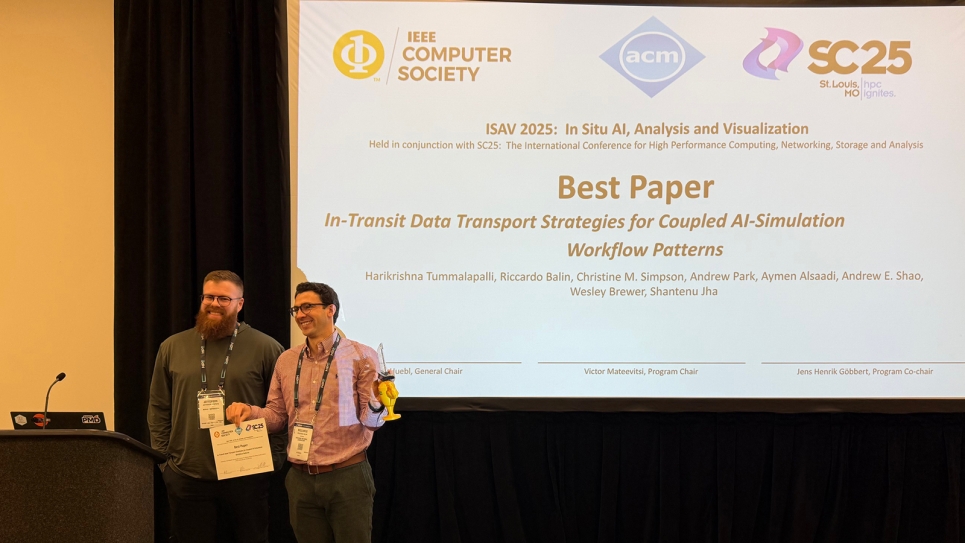

ALCF's Riccardo Balin accepts the Best Paper award at the In Situ Infrastructures for Enabling Extreme-Scale Analysis and Visualization (ISAV) workshop at SC25.

ALCF researchers were awarded Best Paper at ISAV2025 for their development of a new tool for analyzing data transport strategies in coupled AI-simulation workflows.

A team of researchers from the U.S. Department of Energy’s (DOE) Argonne National Laboratory received the Best Paper Award at the In Situ Infrastructures for Enabling Extreme-Scale Analysis and Visualization (ISAV) workshop, held as part of the SC25 conference in November in Saint Louis.

The team’s paper, “In-Transit Data Transport Strategies for Coupled AI-Simulation Workflow Patterns,” introduces SimAI-Bench, a workflow analysis tool for high-performance computing (HPC). The researchers used the tool to develop mini-apps (simplified versions of real scientific workflows) to benchmark different data transport strategies on the Aurora supercomputer at the Argonne Leadership Computing Facility (ALCF). The ALCF is a U.S. DOE Office of Science user facility located at Argonne National Laboratory.

“Coupled AI-simulation workflows are increasingly the standard workflows that users deploy at HPC facilities, so there’s a need for performance analysis and prototyping tools that match these workflows’ increasing complexity,” said Harikrishna Tummalapalli, a postdoctoral researcher at the ALCF and coauthor of the paper. “SimAI-Bench allows users to prototype and evaluate such coupled workflows.”

Tummalapalli’s collaborators on the paper include ALCF colleagues Riccardo Balin and Christine Simpson; Andrew Park, Aymen Alsaadi, and Shantenu Jha of Rutgers University; Hewlett Packard Enterprise’s Andrew E. Shao; and Wesley H. Brewer of DOE’s Oak Ridge National Laboratory.

The team’s study evaluates two workflows common on Aurora: a one-to-one pattern (taken from the nekRS-ML application) and a many-to-one pattern.

“The one-to-one workflow has collocated simulation and AI training instances, and the many-to-one workflow trains a single AI model from an ensemble of simulations,” Tummalapalli said.

“We found that in the one-to-one workflow, node-local and DragonHPC data-staging strategies provide excellent performance,” he noted. “For the many-to-one workflow, we show that data transport is an increasing bottleneck as the ensemble size grows. In such cases, among the strategies we tested, file systems provide the optimal solution.”