As large-scale experiments generate ever-increasing amounts of scientific data, automating research tasks such as data transfer and analysis is critical to helping scientists sort through the results and make new discoveries. Fully exploiting leading-edge computational and data technologies, however, requires new ways of automating research processes.

A team of researchers at the U.S. Department of Energy’s (DOE) Argonne National Laboratory presented new techniques for automating inter-facility workflows by identifying how to express their component processes in abstract terms that make the workflows reusable. A typical example is a workflow that transfers data obtained at beamlines of Argonne’s Advanced Photon Source (APS) to resources at the Argonne Leadership Computing Facility (ALCF) for analysis. The ALCF and APS are U.S. DOE Office of Science user facilities.

Finding the patterns underlying data analysis workflows

Workflows that support experimental research largely follow the same general patterns and consist of the same general steps. These patterns are referred to as paths or flows, and their steps can include data collection, reduction, inversion, storage and publication, machine-learning model training, experiment steering, and coupled simulation.

“An example of a typical flow pattern would be to start with acquisition of data and establishment of quality control,” said Rafael Vescovi, an assistant scientist at Argonne. “This is followed by data reconstruction, training machine learning models on data, and cataloging and storing data. Finally, use the refined model to generate predictions.”

Currently, however, most flows are assembled ad hoc, each built independently of the others to support a particular beamline and a specific analysis. Moreover, few flows make effective use of modern supercomputers such as those at the ALCF.

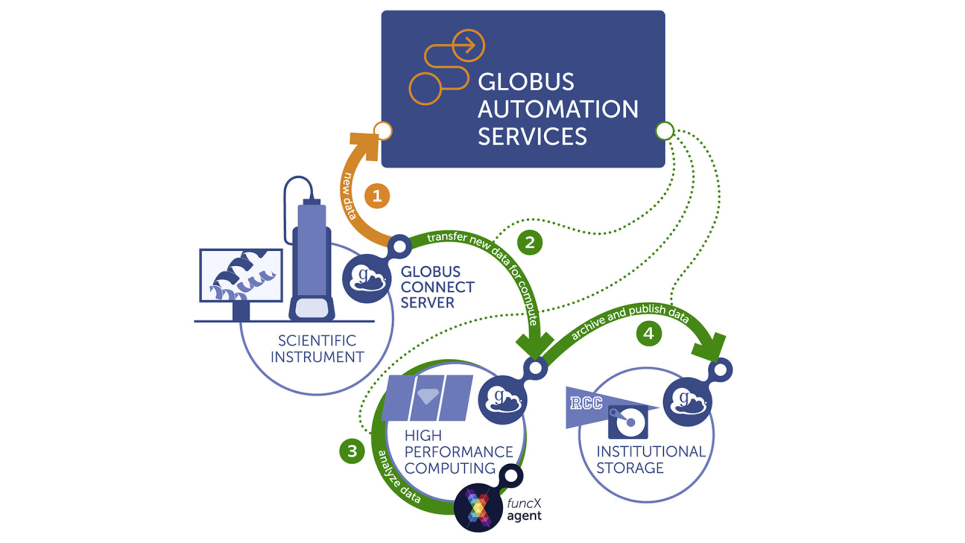

To reduce the duplicated effort that such ad hoc approaches entail, researchers from Argonne and Globus showed, in an article published in Patterns, that Globus automation services such as Globus Flows can provide a language for expressing workflows in a reusable form.

The article also showed that many beamline flows can be automated.

The researchers demonstrated flow reusability by abstracting these paths and breaking them down into common components, which consist of actions such as publishing, moving data, or adding metadata to an index.

“Most things that researchers want to do in this environment, most processes and procedures that they’d execute, can be represented by just a half-dozen or so components, allowing for parameter adjustments,” said Vescovi, the lead author of the article.

Ian Foster, director of Argonne's Data Science and Learning division, co-inventor of Globus, and a co-author of the paper, elaborated: “There are common flows that come up repeatedly, so it makes sense to capture them. In fact, a rather modest number of them underlie a large portion of instrument-facilitated data capture and analysis performed at these facilities.”

“These flows,” he explained, “can be represented in reusable forms that describe a sequence of actions while leaving details such as what the analysis or target instrument is, or which computers or data repositories are involved, unspecified.”
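To make Foster's description concrete, here is a minimal Python sketch of a flow template whose steps come from a small set of generic components and whose specifics (instrument, computer, analysis code, catalog) are left as placeholders until the flow is used. It is not the Globus Flows API or its definition format: the `Step` class, the `bind` helper, the action names, and bindings such as `aps-8id` are all hypothetical, chosen only to illustrate a reusable, parameterized flow.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass(frozen=True)
class Step:
    """One generic component of a flow (hypothetical representation)."""
    action: str                                 # e.g. "transfer", "analyze", "publish"
    params: dict = field(default_factory=dict)  # values may hold ${placeholders}


def bind(template: list, **values: str) -> list:
    """Return a concrete flow by filling every ${name} placeholder.

    Template.substitute raises KeyError if any placeholder has no value,
    so a half-specified flow fails before it runs.
    """
    return [
        Step(s.action, {k: Template(v).substitute(values) for k, v in s.params.items()})
        for s in template
    ]


# Abstract flow: which instrument, computer, analysis code, and catalog are
# involved is deliberately left unspecified.
TRANSFER_ANALYZE_PUBLISH = [
    Step("transfer", {"source": "${instrument}", "destination": "${compute}"}),
    Step("analyze", {"where": "${compute}", "code": "${analysis}"}),
    Step("publish", {"catalog": "${catalog}"}),
]

# Hypothetical bindings for one beamline; these names are illustrative only.
xpcs_flow = bind(
    TRANSFER_ANALYZE_PUBLISH,
    instrument="aps-8id",
    compute="alcf",
    analysis="xpcs-reduction",
    catalog="xpcs-portal",
)
```

Because `bind` builds new `Step` objects, the abstract template stays untouched and can be bound again for a different beamline or computer.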

That is, these flows can be abstracted for repeated and distributed use.

As demonstrated in the Patterns article, the workflow established for one experiment can serve as a template for other beamline work. Conventionally, researchers would construct each new workflow from scratch, even if it shared most of its components with existing workflows. When workflows are expressed in the abstract form enabled by the Globus platform, however, alternative or additional steps can easily be incorporated into an existing workflow.
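Reusing an existing flow while adding a step can be pictured as splicing one component into an ordered list of steps. The following is a hypothetical plain-Python sketch, not the Globus platform's mechanism; `Step`, `insert_after`, and the `index` action with its parameter are assumed names used only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Step:
    """One generic flow component (hypothetical representation)."""
    action: str
    params: dict = field(default_factory=dict)


def insert_after(flow: List[Step], anchor: str, new_step: Step) -> List[Step]:
    """Return a new flow with new_step placed after the first step named anchor.

    The original flow is left untouched, so it remains usable as a template.
    """
    for i, step in enumerate(flow):
        if step.action == anchor:
            return flow[: i + 1] + [new_step] + flow[i + 1 :]
    raise ValueError(f"no step named {anchor!r}")


# An existing flow reused as a template.
base = [Step("transfer"), Step("analyze"), Step("publish")]

# Hypothetical variant for another beamline: add a metadata-indexing step.
variant = insert_after(base, "analyze", Step("index", {"index": "beamline-catalog"}))
```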

Applying automation to real experiments

As part of their efforts to automate inter-facility workflows, researchers captured data at multiple APS beamlines, implementing flows for several applications.

In each case, the data were transferred from the APS to the ALCF to make use of its computational resources. Analyses were then performed almost immediately, and visualizations were loaded into a data portal to enable near-real-time monitoring of the experiment. Results were also added automatically to a catalog for further exploration (enabling, for example, solution of a sample’s protein structure).

The diversity of these applications demonstrated the general applicability of the automation method.

Automation was applied to x-ray photon correlation spectroscopy (XPCS), serial synchrotron crystallography (SSX), ptychography, and high-energy diffraction microscopy (HEDM).

XPCS

XPCS is a powerful technique for characterizing the dynamic nature of complex materials over a range of time and length scales. Researchers automated the collection, reduction, and publication of XPCS data at the APS 8-ID beamline using a ten-step flow. Each experiment performed with the automated flow can generate hundreds of thousands of images.

SSX

SSX is a technique that uses specialized light-source instrumentation to collect high-quality diffraction data from numerous crystals at rates of tens of thousands of images per hour.

Researchers implemented SSX processes via three different flows. The first transfers images acquired at the synchrotron light source to a computing facility (here, the ALCF), where they are analyzed to identify good-quality diffraction images. Once at least 1,000 such images have been identified, the second flow uses them to solve the crystal structure, which is then returned to users at the APS beamline. The final flow organizes and publishes the results.
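The hand-off between the first two SSX flows amounts to a threshold trigger. The sketch below assumes the screening, structure-solving, and publishing steps are supplied as functions; `run_ssx`, `is_good`, `solve_structure`, and `publish` are hypothetical names, and only the 1,000-image threshold comes from the article. For simplicity it attempts a single solution as soon as the threshold is reached.

```python
from typing import Callable, Iterable, List, Optional

THRESHOLD = 1000  # from the article: at least 1,000 good diffraction images


def run_ssx(
    images: Iterable[dict],
    is_good: Callable[[dict], bool],
    solve_structure: Callable[[List[dict]], str],
    publish: Callable[[str], None],
    threshold: int = THRESHOLD,
) -> Optional[str]:
    """Hypothetical coordination of the three SSX flows."""
    good: List[dict] = []
    for image in images:
        # Flow 1: each transferred image is screened for diffraction quality.
        if is_good(image):
            good.append(image)
            if len(good) >= threshold:
                # Flow 2: enough good images to attempt a structure solution,
                # which would then be returned to users at the beamline.
                structure = solve_structure(good)
                # Flow 3: organize and publish the results.
                publish(structure)
                return structure
    return None  # threshold not reached; keep collecting


# Toy usage with synthetic stand-in data (every third image is "good").
images = [{"id": i, "hit": i % 3 == 0} for i in range(5000)]
published: List[str] = []
result = run_ssx(
    images,
    is_good=lambda im: im["hit"],
    solve_structure=lambda imgs: f"structure from {len(imgs)} images",
    publish=published.append,
)
```

Passing the steps in as functions keeps the coordination logic independent of any particular analysis code, in the spirit of the reusable flows described earlier.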

Ptychography

Researchers developed a three-step flow for ptychography, a coherent diffraction imaging technique capable of imaging samples at very fine resolution. Data are first transferred from the experimental facility (here, the APS) to the computing facility, where the diffraction patterns are processed to reconstruct a full representation of the sample. The results are then transferred back to users at the experimental facility. The flow is designed so that hundreds of instances can run in parallel during a single ptychography experiment.
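Because each ptychography flow instance is independent, running hundreds of them in parallel is essentially a fan-out. The sketch below uses Python's standard `concurrent.futures.ThreadPoolExecutor`; the three step functions are placeholders that only manipulate strings, and the paths, the `run_many` helper, and the worker count are assumptions rather than details from the article. A real deployment would call transfer and compute services instead.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List


def transfer_to_compute(scan_id: int) -> str:
    # Placeholder for moving one scan from the experimental facility.
    return f"alcf:/scans/{scan_id}"  # hypothetical destination path


def reconstruct(path: str) -> str:
    # Placeholder for the ptychographic reconstruction at the computing facility.
    return path.replace("/scans/", "/recon/")


def transfer_back(result_path: str) -> str:
    # Placeholder for returning results to users at the experimental facility.
    return result_path.replace("alcf:", "aps:")


def ptychography_flow(scan_id: int) -> str:
    """One flow instance: move data in, reconstruct, move results back."""
    return transfer_back(reconstruct(transfer_to_compute(scan_id)))


def run_many(scan_ids: Iterable[int], max_workers: int = 32) -> List[str]:
    """Run independent flow instances concurrently (worker count is arbitrary)."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(ptychography_flow, scan_ids))


results = run_many(range(300))
```

Threads suit this sketch because real flow steps would mostly wait on remote services; `pool.map` returns results in the order the scans were submitted.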

HEDM

HEDM combines imaging and crystallography data to characterize the microstructures of polycrystalline materials in three dimensions and under various thermo-mechanical conditions.

The Patterns paper presents two HEDM applications that take different approaches to data analysis. The first comprises flows for collecting, analyzing, and storing far-field and near-field diffraction data, together with flows that coordinate those activities; the second trains and deploys a neural-network approximator. Both are broken down into several multistep flows.

Advancing the laboratory and research

Increased use of automation in research will help strengthen the connection between the ALCF and the APS, encouraging more activity from researchers at both facilities. These include APS beamline scientists interested in the new methodology, APS software developers, and ALCF staff, whose expertise will be needed to use computational resources for calculations in near real time and to provide feedback that informs scientists’ experimental choices as they make them.

“ALCF expertise will only continue to become more important after the APS upgrade,” observed Nicholas Schwarz, lead for scientific software and data management at the APS and a co-author of the paper. The upgrade will introduce many new beamlines to the facility and significantly enhance existing instruments. As a consequence, the facility’s data generation rate will increase by several orders of magnitude, in turn requiring much greater computational power for analysis and experiment steering.

“Contemporary data-intensive analysis methods can already easily overwhelm all but the most specialized high-performance computing systems,” Schwarz noted, “so increased automation of our data-acquisition and processing capabilities will only further the need for ALCF expertise.”

Ben Blaiszik, a researcher at Argonne and the University of Chicago and a co-author of the Patterns article, highlighted the potential to make research more efficient.

“There’s another aspect to this development, and that’s the extent to which it represents a change in the way that the workflow influences the science that’s performed,” Blaiszik explained. “Historically, after collecting data, a researcher would need to figure out how to transfer it to the ALCF or a similar computing facility for analysis. Identification of erroneous data is likely in such cases to occur only after the experiment is complete, which can necessitate a large replication of work. With the detection that results from automation and real-time analysis, conclusive results can be obtained much faster.”

He added that workflow automation also benefits science by enabling users to capture more data at every step, which ultimately allows them to collect both higher-quality data and greater quantities of it.

==========

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

About the Advanced Photon Source

The U.S. Department of Energy Office of Science’s Advanced Photon Source (APS) at Argonne National Laboratory is one of the world’s most productive X-ray light source facilities. The APS provides high-brightness X-ray beams to a diverse community of researchers in materials science, chemistry, condensed matter physics, the life and environmental sciences, and applied research. These X-rays are ideally suited for explorations of materials and biological structures; elemental distribution; chemical, magnetic, electronic states; and a wide range of technologically important engineering systems from batteries to fuel injector sprays, all of which are the foundations of our nation’s economic, technological, and physical well-being. Each year, more than 5,000 researchers use the APS to produce over 2,000 publications detailing impactful discoveries, and solve more vital biological protein structures than users of any other X-ray light source research facility. APS scientists and engineers innovate technology that is at the heart of advancing accelerator and light-source operations. This includes the insertion devices that produce extreme-brightness X-rays prized by researchers, lenses that focus the X-rays down to a few nanometers, instrumentation that maximizes the way the X-rays interact with samples being studied, and software that gathers and manages the massive quantity of data resulting from discovery research at the APS.

This research used resources of the Advanced Photon Source, a U.S. DOE Office of Science User Facility operated for the DOE Office of Science by Argonne National Laboratory under Contract No. DE-AC02-06CH11357.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation's first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America's scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy's Office of Science.

The U.S. Department of Energy's Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science