Aurora software development: Bringing the OCCA open-source library to exascale

In this series, we examine the range of activities and collaborations that ALCF staff undertake to guide the facility and its users into the next era of scientific computing.

Kris Rowe, a computational scientist at the Argonne Leadership Computing Facility (ALCF), is leading collaborative efforts to bring OCCA (an open-source, vendor-neutral framework and library for parallel programming on diverse architectures) to Aurora, the ALCF’s forthcoming exascale system. The ALCF is a U.S. Department of Energy (DOE) Office of Science User Facility located at Argonne National Laboratory.

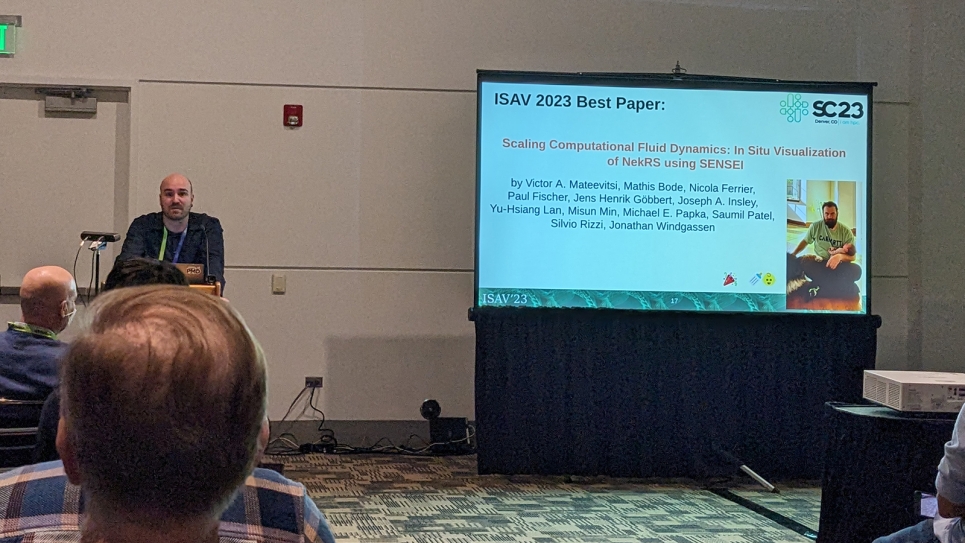

Mission-critical computational science and engineering applications from the DOE and private industry rely on OCCA. It gives developers transparency into the raw backend code it generates and provides just-in-time compilation that permits SYCL/DPC++-compatible kernel caching. NekRS, for example, a new computational fluid dynamics solver built on OCCA by the Nek5000 developers, is used to simulate coolant flow inside small modular reactors and to design more efficient combustion engines.
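Kernel caching can be illustrated with a minimal C++ sketch. It assumes a hypothetical `KernelCache` class (not part of OCCA’s API) that keys each compiled kernel by its source text plus its build properties. A repeated request therefore reuses the earlier result instead of invoking the backend compiler again. `CompiledKernel` is a placeholder for whatever binary handle a real backend would return.

```cpp
#include <map>
#include <string>
#include <utility>

// Placeholder for a compiled device binary; a real backend would store a
// module or program handle here instead of copies of the inputs.
struct CompiledKernel {
  std::string source;
  std::string props;
};

// Hypothetical JIT cache (not OCCA's implementation): compile on the first
// request for a given (build properties, source) pair, then reuse the result.
class KernelCache {
 public:
  const CompiledKernel& build(const std::string& source,
                              const std::string& props) {
    const std::pair<std::string, std::string> key{props, source};
    auto it = cache_.find(key);
    if (it == cache_.end()) {
      ++compileCount_;  // cache miss: this is where a backend compiler would run
      it = cache_.emplace(key, CompiledKernel{source, props}).first;
    }
    return it->second;  // std::map references stay valid across insertions
  }

  int compileCount() const { return compileCount_; }

 private:
  std::map<std::pair<std::string, std::string>, CompiledKernel> cache_;
  int compileCount_ = 0;
};
```

Keying on the full (properties, source) pair rather than on a hash alone avoids treating two different kernels as identical if their hashes collide. A persistent on-disk cache would typically still hash the pair to name its files.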

Multiple components

Developed for exascale with the support of DOE’s Exascale Computing Project, OCCA is designed to promote portability. It consists of several independent components that can be used individually or in combination: the OCCA API and runtime, the OCCA kernel language (OKL), and the OCCA command line tool. The framework supports applications written in C, C++, and Fortran.

The OCCA API provides developers with unified models of concepts common to other programming frameworks, such as devices, memory, and kernels. The runtime implements the API as a set of lightweight wrappers over several backends (DPC++, CUDA, HIP, OpenMP, OpenCL, and Metal). OCCA also provides a DPC++-friendly Fortran interface as an alternative to OpenMP.
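To make the idea of an API implemented as lightweight wrappers concrete, the sketch below uses hypothetical `Device`, `Backend`, and `SerialBackend` classes; these are not OCCA’s actual types. The user-facing `Device` only forwards calls to whichever backend a mode string selects, so application code stays the same when the backend changes. Only a serial host backend is implemented here.

```cpp
#include <cstddef>
#include <memory>
#include <new>
#include <stdexcept>
#include <string>

// Hypothetical backend interface (not OCCA's real class hierarchy).
class Backend {
 public:
  virtual ~Backend() = default;
  virtual std::string name() const = 0;
  virtual void* allocate(std::size_t bytes) = 0;
  virtual void release(void* ptr) = 0;
};

// Serial host backend: plain heap allocation.
class SerialBackend : public Backend {
 public:
  std::string name() const override { return "Serial"; }
  void* allocate(std::size_t bytes) override { return ::operator new(bytes); }
  void release(void* ptr) override { ::operator delete(ptr); }
};

// User-facing wrapper: chooses a backend once, then forwards every call.
class Device {
 public:
  explicit Device(const std::string& mode) {
    if (mode == "Serial") {
      backend_ = std::make_unique<SerialBackend>();
    } else {
      // A fuller sketch would construct CUDA, HIP, DPC++, ... backends here.
      throw std::invalid_argument("unsupported mode: " + mode);
    }
  }

  std::string mode() const { return backend_->name(); }
  void* allocate(std::size_t bytes) { return backend_->allocate(bytes); }
  void release(void* ptr) { backend_->release(ptr); }

 private:
  std::unique_ptr<Backend> backend_;
};
```

A GPU backend would wrap its own allocation and launch calls behind the same `Backend` interface, leaving `Device` unchanged.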

OKL enables the creation of portable device kernels using a directive-based extension to the C language. At runtime, the OCCA Jitter translates OKL code into the programming language of the user’s chosen backend, and the backend’s own toolchain then compiles that code into a device binary. Alternatively, kernels can be written directly in backend-specific code, such as OpenCL or CUDA.
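The OKL loop quoted in the comment below is OCCA’s classic vector-addition example, where the `@tile(16, @outer, @inner)` attribute splits the loop into tiles of 16. The C++ function after it is a hand-written approximation of the loop structure this expands to, not actual Jitter output. The outer loop walks over tiles and the inner loop over elements within a tile, with a bounds check for the final partial tile. On a GPU backend, outer iterations would roughly map to work-groups (thread blocks) and inner iterations to work-items (threads). The name `addVectorsSerial` is hypothetical.

```cpp
#include <vector>

// OKL source (for reference):
//   @kernel void addVectors(const int entries, const float *a,
//                           const float *b, float *ab) {
//     for (int i = 0; i < entries; ++i; @tile(16, @outer, @inner)) {
//       ab[i] = a[i] + b[i];
//     }
//   }
//
// Approximate serial C++ equivalent of the tiled loop structure.
void addVectorsSerial(int entries, const float* a, const float* b, float* ab) {
  constexpr int tile = 16;
  for (int tileStart = 0; tileStart < entries; tileStart += tile) {  // @outer
    for (int i = tileStart; i < tileStart + tile; ++i) {             // @inner
      if (i < entries) {  // guard the partial last tile
        ab[i] = a[i] + b[i];
      }
    }
  }
}
```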

On the Oak Ridge Leadership Computing Facility’s Summit supercomputer, OCCA uses its CUDA backend: kernels are translated into CUDA for performance, and because the generated code is fully visible, developers can inspect it directly when debugging.

Collaboration drives development

Using OpenMP and DPC++, Rowe worked with Saumil Patel of Argonne’s Computational Sciences Division to establish initial benchmarks for various types of kernels before beginning development of the SYCL/DPC++ OCCA backend. Development has occurred in collaboration with Intel engineers who are part of a co-design project with Royal Dutch Shell, which uses OCCA for seismic-imaging applications. Performance analysis is carried out with Intel Advisor and Intel VTune Profiler.

The partnership is ongoing. Rowe and Patel currently meet biweekly with the Intel team to coordinate testing and performance work and to drive the development of new features.

The Intel Center of Excellence (COE) played a central role in bringing the two halves of this project team together. Moreover, locating the COE at Argonne enables more deeply integrated collaborations that focus and centralize strategy, reduce bottlenecks and communication gaps, and help minimize duplicated effort.

Programming models used by the OCCA backends continually evolve. Rowe is currently surveying the latest DPC++, OpenMP, CUDA, and HIP specifications to identify common performance-critical features, such as asynchronous memory transfers and work-group collectives, that have yet to propagate into OCCA. Using this information, the team will propose extensions to the existing OCCA API for inclusion in future releases of the framework.
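Work-group collectives are operations in which all work-items of a group cooperate, such as a sum reduction. SYCL 2020, for instance, exposes one as `sycl::reduce_over_group`. The sketch below emulates a tree-based work-group reduction on the host with a hypothetical `workGroupReduceSum` function and assumes a power-of-two work-group size. Each iteration of the outer loop stands for one phase that, on a device, would end with a barrier across the work-group.

```cpp
#include <cstddef>
#include <vector>

// Host-side emulation of a tree-based work-group sum reduction.
// Assumes local.size() (the work-group size) is a power of two.
float workGroupReduceSum(std::vector<float> local) {
  for (std::size_t stride = local.size() / 2; stride > 0; stride /= 2) {
    // Work-items with id < stride would run concurrently in this phase.
    // Reads (id + stride) and writes (id) touch disjoint slots, so the
    // serial order here gives the same result as parallel execution.
    for (std::size_t id = 0; id < stride; ++id) {
      local[id] += local[id + stride];
    }
    // On a device, a work-group barrier would go here before the next phase.
  }
  return local.empty() ? 0.0f : local[0];
}
```

A built-in collective lets the vendor runtime choose a faster strategy (for example, sub-group shuffles) than this shared-memory tree. That kind of performance-critical feature is what an extended portable API would aim to expose.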