From cells to the cosmos: the research campaigns already underway on Aurora

Throughout the process of stress-testing, stabilizing, and optimizing the Aurora exascale supercomputer at the U.S. Department of Energy’s (DOE) Argonne National Laboratory for full deployment, research teams have been working to develop and optimize codes for use on the system.

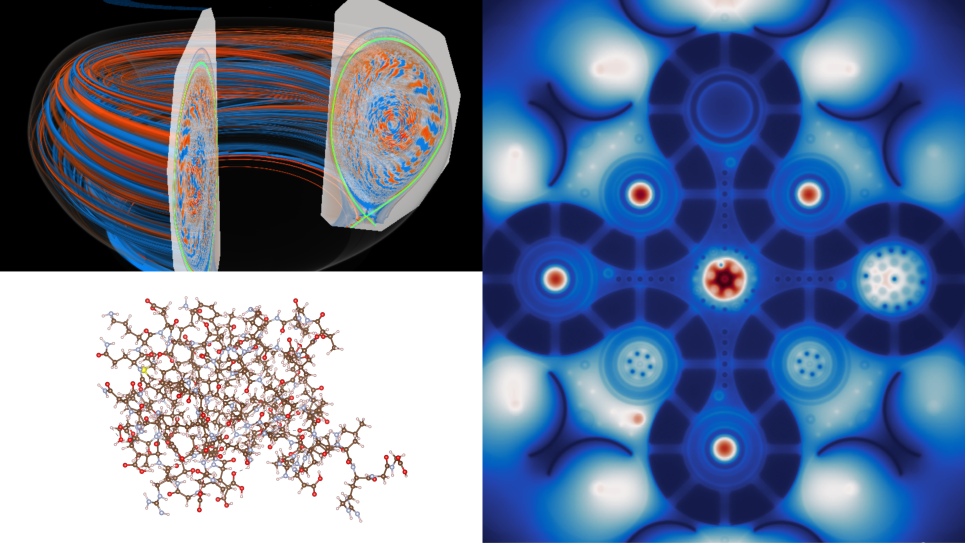

A wide range of programming models, languages, and applications are already running well on Aurora, representing a diversity of disciplines and research methods, from leading-edge brain science and drug discovery to next-generation fusion and particle physics simulations. These projects are offering a glimpse at the supercomputer’s vast capabilities.

Housed at the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science user facility at Argonne, Aurora is one of the fastest supercomputers in the world. The system was built in partnership with Intel and Hewlett Packard Enterprise (HPE).

Below we take a look at some of the early research campaigns being carried out on the system.

AI-Guided Brain Mapping: Large-Scale Connectomics

The structure of the human brain is enormously complex and not well understood. Its 80 billion neurons, each connected to as many as 10,000 other neurons, support activities from sustaining vital life processes to defining who we are. Working from high-resolution electron microscopy images of brain tissue, computer vision and machine learning techniques operating at exascale can reveal the morphology and connectivity of neurons in brain tissue samples, helping to advance our understanding of the structure and function of mammalian brains.

With the arrival of Aurora, scientists are leveraging innovations in imaging, supercomputing and artificial intelligence to advance connectomics research, which is focused on mapping the neural connections in the brain. The team’s techniques will enable computing to scale from cubic millimeters of brain tissue today, to a cubic centimeter whole mouse brain, and to larger volumes of brain tissue in the future.

Deep Learning-Enhanced High-Energy Particle Physics at Scale: CosmicTagger

Researchers in high-energy particle physics are working to develop improved methods for detecting neutrino interactions and distinguishing them from other cosmic particles and background noise in a crowded environment. Deep learning methods, such as the CosmicTagger application, offer powerful tools for advancing neutrino physics research. Now running on Aurora, CosmicTagger is a deep learning-guided computer vision model featuring high-resolution imaging data and corresponding segmentation labels originating from high-energy neutrino physics experiments.

A key benchmark for high-performance computing systems, CosmicTagger aims to differentiate and classify each pixel to separate cosmic pixels, background pixels, and neutrino pixels in a neutrino dataset. The application, which is enabling substantially improved background particle rejection compared to classical techniques, will aid in the analysis of large-scale datasets generated by upcoming neutrino experiments.
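To make the per-pixel classification task concrete, here is a minimal sketch in plain Python. It is not CosmicTagger's network or code: it assumes a model has already produced three scores per pixel, and the function names (`label_pixels`, `class_counts`) and the toy scores are hypothetical, chosen only for illustration. Each pixel simply receives the label of its highest-scoring class.

```python
# Illustrative only: the class names follow the article, the scores are made up.
CLASSES = ("background", "cosmic", "neutrino")


def label_pixels(scores):
    """Assign each pixel the class with the highest score.

    `scores` is a 2D grid (a list of rows). Each entry is a tuple of three
    per-class scores, in the order given by CLASSES.
    """
    return [
        [CLASSES[max(range(len(CLASSES)), key=lambda k: pixel[k])] for pixel in row]
        for row in scores
    ]


def class_counts(labels):
    """Count how many pixels were assigned to each class."""
    counts = {name: 0 for name in CLASSES}
    for row in labels:
        for name in row:
            counts[name] += 1
    return counts


# A hypothetical 2x2 "image" of per-pixel class scores.
toy_scores = [
    [(0.9, 0.05, 0.05), (0.2, 0.7, 0.1)],
    [(0.1, 0.2, 0.7), (0.8, 0.1, 0.1)],
]
labels = label_pixels(toy_scores)
counts = class_counts(labels)
```

In a real workflow the scores would come from a trained segmentation model applied to detector images; the labeling step shown here is only the final stage.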

Accelerating Drug Design and Discovery with Machine Learning

High-throughput screening of massive datasets of chemical compounds to identify therapeutically advantageous properties is a promising step in the treatment of diseases like cancer, as well as in the response to epidemics such as the one caused by SARS-CoV-2. However, traditional structural approaches for assessing binding affinity, such as free energy methods or molecular docking, pose significant computational bottlenecks when dealing with data at this scale.

Using Aurora, researchers are leveraging machine learning to screen extensive compound databases for molecular properties that could prove useful for developing new drugs. Aurora’s computing power and AI capabilities will make it possible to screen 40 to 60 billion candidate compounds per hour for potential synthesis. A key future direction for the team’s workflow involves integrating de-novo drug design, enabling the researchers to scale their efforts to explore the limits of synthesizable compounds within the chemical space.
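For a sense of scale, the projected hourly screening rate can be converted to a per-second rate. The short calculation below uses only the 40 to 60 billion figure quoted above; the helper name `per_second` is hypothetical.

```python
def per_second(compounds_per_hour):
    """Convert an hourly screening rate to compounds per second."""
    return compounds_per_hour / 3600


# Projected range quoted in the article: 40 to 60 billion compounds per hour.
low = per_second(40e9)
high = per_second(60e9)
print(f"{low / 1e6:.1f} to {high / 1e6:.1f} million compounds per second")
```

That works out to roughly 11 to 17 million candidate compounds every second, sustained across the machine.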

Using Quantum Simulations to Unlock the Secrets of Molecular Science: GAMESS

Researchers in computational chemistry require means to carry out demanding tasks like computing the energies and reaction pathways of catalysis processes. Designed to help solve such problems using high-fidelity quantum simulations, GAMESS, or General Atomic and Molecular Electronic Structure System, is a general-purpose electronic structure code for computational chemistry. The code has been rewritten for next-generation exascale systems, including Aurora.

Using a well-defined heterogeneous catalysis problem to prepare for Aurora, GAMESS has demonstrated advanced capabilities for modeling complex physical systems that involve chemical interactions with many thousands of atoms.

Probing the Universe with Machine Learning-Enhanced, Extreme-Scale Cosmological Simulations: CRK-HACC

Cosmology currently poses some of the deepest questions in physics about the nature of gravity and matter. The analysis of observations from large sky surveys requires detailed simulations of structure formation in the universe. The simulations must cover vast length scales, from small dwarf galaxies to galaxy clusters (the largest bound objects in the universe). At the same time, the physical modeling must be sufficiently accurate on the relevant scales—gravity dominating on large scales, and baryonic physics becoming important on small scales.

The Hardware/Hybrid Accelerated Cosmology Code (HACC) is a cosmological N-body and hydrodynamics simulation code designed to run at extreme scales. HACC computes the complicated emergence of structure in the universe across cosmological history. The core of the code’s functionality consists of gravitational calculations along with the more recent addition of gas dynamics and astrophysical subgrid models.
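The gravitational core of an N-body code can be illustrated with a toy direct-summation routine. This is a sketch of the textbook approach, not HACC's implementation: it costs O(N²) operations, whereas extreme-scale codes rely on far more scalable solvers. The function name `accelerations`, the unit choice `G=1.0`, and the `softening` parameter are assumptions made for this example.

```python
import math


def accelerations(positions, masses, G=1.0, softening=1e-3):
    """Gravitational acceleration on each particle by direct summation.

    positions: list of (x, y, z) tuples; masses: list of floats.
    `softening` avoids a division by zero when two particles nearly coincide.
    """
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            # Separation vector pointing from particle i to particle j.
            d = [positions[j][k] - positions[i][k] for k in range(3)]
            r2 = sum(c * c for c in d) + softening ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * masses[j] * d[k] * inv_r3
    return acc


# Two unit masses one unit apart attract each other with equal and opposite pull.
acc = accelerations([(0, 0, 0), (1, 0, 0)], [1.0, 1.0], softening=0.0)
```

A full simulation would feed these accelerations into a time integrator and, as the article notes for HACC, add gas dynamics and subgrid models on top.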

HACC simulations have been performed on Aurora in runs using as many as 1,920 nodes, and visualizations of results generated on the system illustrate the large-scale structure of the universe. Deploying the HACC code at exascale will help deepen our understanding of the structure of the universe and its underlying physics.

High-Performance Computational Chemistry at the Quantum Level: NWChemEx

A primary goal of biofuels research is to develop fuels that can be distributed using existing infrastructure and can replace traditional fuels on a gallon-for-gallon basis. However, producing high-quality biofuels in a sustainable and economically competitive way presents significant technical challenges. Two such challenges are designing feedstock to efficiently produce biomass and developing new catalysts to efficiently convert biomass-derived intermediates into biofuels.

The NWChemEx project, which includes quantum chemical and molecular dynamics functionality, has the potential to accelerate the development of stress-resistant biomass feedstock and the development of energy-efficient catalytic processes for producing biofuels. Additionally, it aims to address a wealth of other pressing challenges at the forefront of molecular modeling, including development of next-generation batteries, the design of new functional materials, and the simulation of combustive chemical processes.

Simulations using NWChemEx, a ground-up rewrite of the ab initio computational chemistry software package NWChem, have been run on Aurora across hundreds of nodes.

High-Fidelity Monte Carlo Neutron and Photon Transport Simulations: OpenMC

Researchers aim to harness the power of exascale computing to model the entire core of a nuclear reactor, generating virtual reactor simulation datasets with high-fidelity, coupled physics models for reactor phenomena that are truly predictive. To this end they will employ OpenMC, a Monte Carlo neutron and photon transport simulation code that simulates the stochastic motion of neutral particles. Models used in OpenMC simulations can range in complexity from a simple slab of radiation-shielding material to a full-scale nuclear reactor.
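The simplest case mentioned above, a slab of shielding material, shows what "simulating the stochastic motion of neutral particles" means in practice. The sketch below does not use OpenMC or its API. It assumes a purely absorbing slab hit head-on by particles, with a hypothetical total cross section `sigma_t` (per unit length), and estimates the fraction that passes through by sampling each particle's distance to its first collision.

```python
import math
import random


def slab_transmission(sigma_t, thickness, n_particles, seed=0):
    """Estimate the fraction of particles crossing a purely absorbing slab.

    Each particle's distance to first collision is drawn from an exponential
    distribution with rate sigma_t. The particle is transmitted if that
    distance exceeds the slab thickness.
    """
    rng = random.Random(seed)
    transmitted = 0
    for _ in range(n_particles):
        distance = rng.expovariate(sigma_t)
        if distance > thickness:
            transmitted += 1
    return transmitted / n_particles


estimate = slab_transmission(sigma_t=1.0, thickness=2.0, n_particles=100_000)
exact = math.exp(-1.0 * 2.0)  # analytic answer for a pure absorber
print(f"Monte Carlo: {estimate:.4f}, analytic: {exact:.4f}")
```

For this idealized problem the analytic answer is available, so the Monte Carlo estimate can be checked directly. A full-core reactor model adds scattering, fission, energy dependence, and complex geometry, which is where the cost grows and where GPU acceleration matters.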

Originally written for central processing unit (CPU)-based computing systems, OpenMC has been rewritten for graphics processing unit (GPU)-oriented machines, including Aurora. The extreme performance gains OpenMC has achieved on GPUs are finally bringing within reach a much larger class of problems that historically were deemed too expensive to simulate using Monte Carlo methods.

Large-Scale Multiphysics Magnetic Fusion Reactor Simulations: XGC

DOE supercomputers play a key role in fusion research, which heavily depends on computationally intensive, high-fidelity simulations. To advance fusion research on Aurora, scientists are preparing the X-Point Gyrokinetic Code (XGC) to perform large-scale simulations of fusion edge plasmas. XGC is being developed in tandem with the Whole Device Model Application project, which aims to build a high-fidelity model of magnetically confined fusion plasmas to help plan research at the ITER fusion experiment in France.

Specializing in edge physics and realistic geometry, XGC is capable of solving boundary multiscale plasma problems across the magnetic separatrix (that is, the boundary between the magnetically confined and unconfined plasmas) and in contact with a material wall called a divertor, using first-principles-based kinetic equations. Aurora will enable a much larger and more realistic range of dimensionless plasma parameters than ever before, with the core and the edge plasma strongly coupled at a fundamental kinetic level based on the gyrokinetic equations.