Preparing a cosmological simulation code for exascale systems

As part of a new series aimed at sharing best practices in preparing applications for Aurora, we highlight researchers' efforts to optimize codes to run efficiently on graphics processing units.

LET’S TALK EXASCALE CODE DEVELOPMENT: HACC

The focus of this episode is on a cosmological code called HACC, and joining us to discuss it are Nicholas Frontiere, Steve Rangel, Michael Buehlmann, and JD Emberson of DOE’s Argonne National Laboratory.


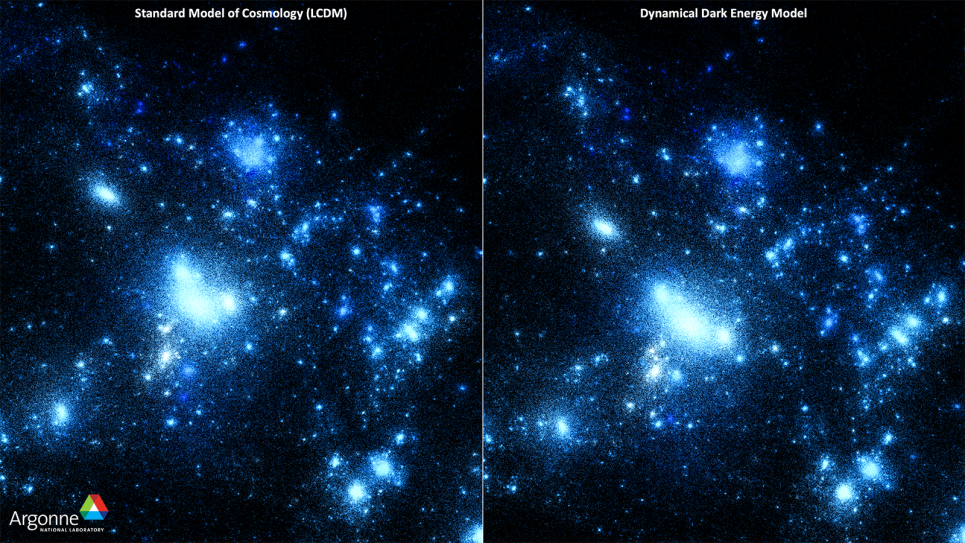

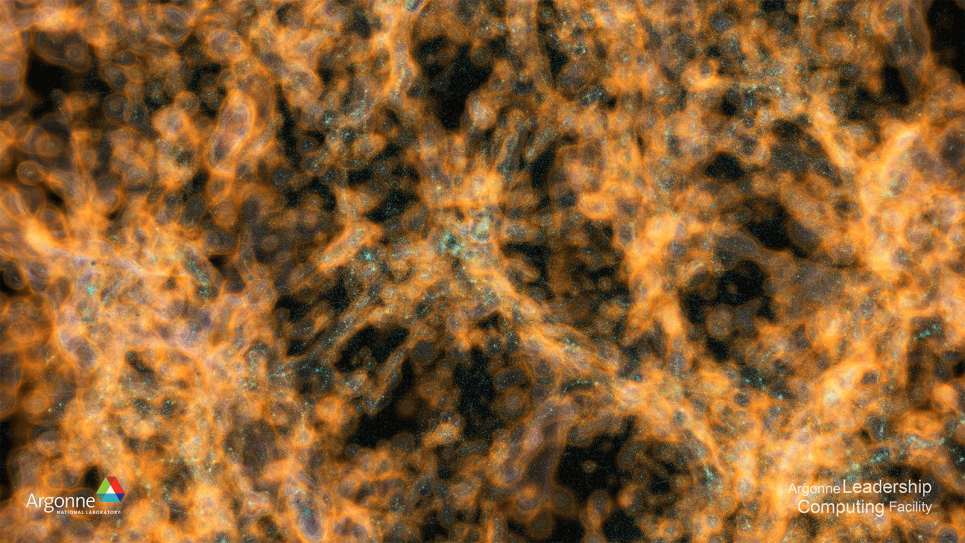

HACC (Hardware/Hybrid Accelerated Cosmology Code) is a cosmological N-body and hydrodynamics simulation code designed to run at extreme scales on all of the high-performance computing (HPC) systems operated by the U.S. Department of Energy’s (DOE) national laboratories. HACC computes the complicated emergence of structure in the universe across cosmological history; the core of the code’s functionality consists of gravitational calculations, along with the more recent addition of gas dynamics and astrophysical subgrid models. With the exascale era of computing imminent, there are new machines for which HACC must be prepared. At the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility, this means getting HACC ready for the forthcoming Aurora system.

To this end, a team of collaborators from two directorates and multiple divisions at DOE's Argonne National Laboratory is working to ensure that HACC is performant on Day One following the arrival of Aurora, whose computational capabilities will be derived in part from the power of graphics processing units (GPUs). HACC has a long history of running on GPU-based machines and on accelerated machines in general, thus providing solid groundwork for the current efforts to prepare for Aurora.

To be ready to run on multiple platforms, a code needs some degree of portability that does not come at a significant cost in performance. Performance portability can mean different things to different development teams. HACC is designed to emphasize performance over strict portability. While some key, computationally intensive sections of HACC must be rewritten for each new machine, its minimal library dependence and the successful separation of communication kernels from compute-bound kernels allow it to quickly achieve optimal performance on most architectures. Because HACC simulations are often run on systems as soon as they are accessible through early access programs, the design avoids reliance on backend libraries or programming models that may not be available or fully optimized. Indeed, it is because of HACC’s minimal dependencies (MPI and a C and C++ compiler) that the goal of running on every major DOE production machine is even feasible.

HACC is structured to remain mostly consistent across different architectures such that it requires only limited changes when ported to new hardware; the inter-nodal level of code—the level of code that communicates between nodes—is nearly invariant from machine to machine.

Consequently, the approach taken to porting HACC essentially reduces the problem to the node level, thereby permitting concentration of effort on optimizing critical code components with a full awareness of the actual hardware. Of course, not all applications can afford this strategy—in many cases, the computation and communication burden cannot be as efficiently separated and localized.
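The separation described above can be illustrated with a minimal, single-process C++ sketch. It is not taken from HACC: the names `Particles`, `ForceKernel`, `exchange_particles`, `cpu_direct_sum`, and `time_step` are hypothetical, the communication layer is a stub standing in for MPI calls, and the kernel is a generic textbook direct sum with Plummer softening (unit masses, G = 1) rather than HACC's actual force solver. The point is only that the driver stays fixed while the node-level kernel is a swappable argument.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical particle container; HACC's real data layout is not
// described in the article.
struct Particles {
    std::vector<float> x, y, z;     // positions
    std::vector<float> ax, ay, az;  // accelerations, filled by the kernel
};

// Interface that every node-level backend is assumed to satisfy.
using ForceKernel = std::function<void(Particles&)>;

// Stand-in for the machine-invariant inter-node layer. A real code would
// exchange boundary particles with MPI here; this stub only checks sizes.
inline void exchange_particles(Particles& p) {
    assert(p.x.size() == p.y.size() && p.y.size() == p.z.size());
}

// One possible node-level backend: O(N^2) direct sum with Plummer
// softening, unit masses, G = 1. A GPU backend would replace this function.
void cpu_direct_sum(Particles& p) {
    const std::size_t n = p.x.size();
    const float eps2 = 1e-4f;  // softening length squared (assumed value)
    p.ax.assign(n, 0.0f);
    p.ay.assign(n, 0.0f);
    p.az.assign(n, 0.0f);
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j < n; ++j) {
            if (i == j) continue;
            const float dx = p.x[j] - p.x[i];
            const float dy = p.y[j] - p.y[i];
            const float dz = p.z[j] - p.z[i];
            const float r2 = dx * dx + dy * dy + dz * dz + eps2;
            const float inv_r3 = 1.0f / (r2 * std::sqrt(r2));
            p.ax[i] += dx * inv_r3;
            p.ay[i] += dy * inv_r3;
            p.az[i] += dz * inv_r3;
        }
    }
}

// Driver: the communication step is identical on every machine, and only
// the kernel argument changes when the code is ported.
void time_step(Particles& p, const ForceKernel& kernel) {
    exchange_particles(p);  // inter-node layer (nearly invariant)
    kernel(p);              // node-level layer (rewritten per architecture)
}
```

Calling `time_step(p, cpu_direct_sum)` runs the CPU backend. Under this design, porting to a new accelerator would mean supplying a different callable with the same signature and leaving `time_step` untouched.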

In addition, HACC’s structure promotes experimentation with different programming models in key computational kernels. In the case of Aurora, a machine with Intel GPUs, the HACC team decided to test several programming models for limited, computationally intensive sections of the code, including assessing multiple approaches to developing Data Parallel C++ (DPC++) code. While doing so, the team also runs the code in its entirety on test problems. A primary advantage of being able to do this (as opposed to, say, running a microkernel or mini-application) is that the developers obtain a clear perspective of how the entire application is functioning and have the opportunity to discover problem areas that might otherwise go undetected until much later. The approach does come with the tradeoff of added complexity, which needs to be managed.

Versions of HACC being developed for the Oak Ridge Leadership Computing Facility’s Summit machine and future exascale systems incorporate basic gas physics (hydrodynamics) to enable more detailed studies of structure formation on the scales of galaxy clusters and individual galaxies. These hydrodynamical versions also include subgrid models that integrate phenomena like star formation and supernova feedback. Adding these baryonic physics components means adding more performance-critical code sections; these sections will be offloaded to run on the GPUs. A GPU implementation of HACC with hydrodynamics was previously developed for the Summit system using CUDA. All the GPU versions of the code will be rewritten to target Aurora.

All HACC ports begin with the gravity-only version, which calculates only gravitational forces in an expanding universe: while other interactions become negligible with distance, gravitational fields are long-range and must always be calculated. The gravity-only variants are easier to work with than those with additional physics, so they are always the starting point when retooling the code. For the Aurora port, the existing, highly performant OpenCL version of the code was a boon to the developers. In the initial setup, an OpenCL version of the code runs on Intel GPU hardware, where it is used to verify answers and evaluate performance. Because it can verify correct functionality, calculations, and expected levels of performance, this setup serves as a benchmark when comparing different programming models.
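As a rough illustration of how a trusted version can serve as the yardstick for a new one, the C++ sketch below compares a candidate kernel's output against reference values using a relative tolerance. It is an assumption about how such a check might look, not the HACC team's actual validation procedure; the function names `max_relative_error` and `matches_reference`, the `abs_floor` parameter, and any tolerance value are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Largest relative difference between reference values (for example, forces
// from a trusted implementation) and values from a candidate implementation.
// `abs_floor` keeps the denominator away from zero for tiny reference values.
double max_relative_error(const std::vector<double>& reference,
                          const std::vector<float>& candidate,
                          double abs_floor = 1e-12) {
    assert(reference.size() == candidate.size());
    double worst = 0.0;
    for (std::size_t i = 0; i < reference.size(); ++i) {
        const double denom = std::max(std::fabs(reference[i]), abs_floor);
        const double diff =
            std::fabs(static_cast<double>(candidate[i]) - reference[i]);
        worst = std::max(worst, diff / denom);
    }
    return worst;
}

// True when every candidate value agrees with the reference to within
// the given relative tolerance.
bool matches_reference(const std::vector<double>& reference,
                       const std::vector<float>& candidate,
                       double tolerance) {
    return max_relative_error(reference, candidate) <= tolerance;
}
```

A port under evaluation would pass its per-particle results as `candidate`. The appropriate tolerance depends on the arithmetic precision and summation order of each backend, so it is left as a caller-supplied parameter here.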

Focusing on the gravity-only kernels, the HACC team is studying two potential routes to a DPC++ version of the code. One is simply writing new DPC++ code by hand. The other is running the CUDA version of the code through a translation tool and evaluating the resulting DPC++. While this comparison process will not be undertaken for every HACC component, it can help inform the approach to porting the hydrodynamics kernels. In addition to gauging the level of complexity the translation tool can handle while still delivering performant code, the team can place the handwritten kernel side by side with the translated one to learn which hydrodynamics kernels might be machine-translatable and to identify the code structures best suited to such automation. Relatedly, past experiments with different programming models for the code’s gravity section effectively function as prototypes for the remaining hydrodynamic components; the pros and cons of each can be analyzed and compared, enabling the team to home in on the optimal construction and implementation of the gravity kernel. As the DPC++ approach to the gravity kernels is already under sufficient control, the team is now working on the hydrodynamics kernels.