Researchers Describe Project to Merge Cloud Computing and Supercomputing

Using a Director’s Discretionary allocation at the Argonne Leadership Computing Facility (ALCF), researchers from North Carolina State University recently completed a project that successfully merged a cloud computing environment with a supercomputer. Here, project leads Patrick Dreher and Mladen Vouk discuss their work at the ALCF.

The ALCF’s Director’s Discretionary (DD) program presented us with a unique opportunity to use a Blue Gene/P machine to research, explore, and develop the concept of embedding a distributed cloud computing architecture within a supercomputer. The goal of our DD project was to demonstrate a proof-of-concept capability for this novel high-performance computing (HPC) environment.

The “traditional” cloud design approach often starts with a relatively loosely coupled x86-based architecture. Then, if there is interest in HPC, a cloud computing service is added and adapted to HPC computational needs and requirements. More often than not, such a service is based on virtual machines and is used by distributed applications that do not share the stringent latency requirements of HPC applications.

The key idea for our research project was to extend the reverse approach already in use in some implementations of the Virtual Computing Laboratory (VCL). Essentially, we wanted to start with an HPC-capable architecture and enable it to host a cloud so that its native HPC functions were preserved. We used the supercomputer’s hardware architecture as the foundation on which to build a software-defined system capable of embedding a cloud computing environment that could both exploit and virtualize the existing properties of the host’s hardware. This novel methodology has the potential to be applied to complex mixed-load workflow implementations, to data-flow-oriented workloads, and to experimentation with new schedulers and operating systems within an HPC environment.

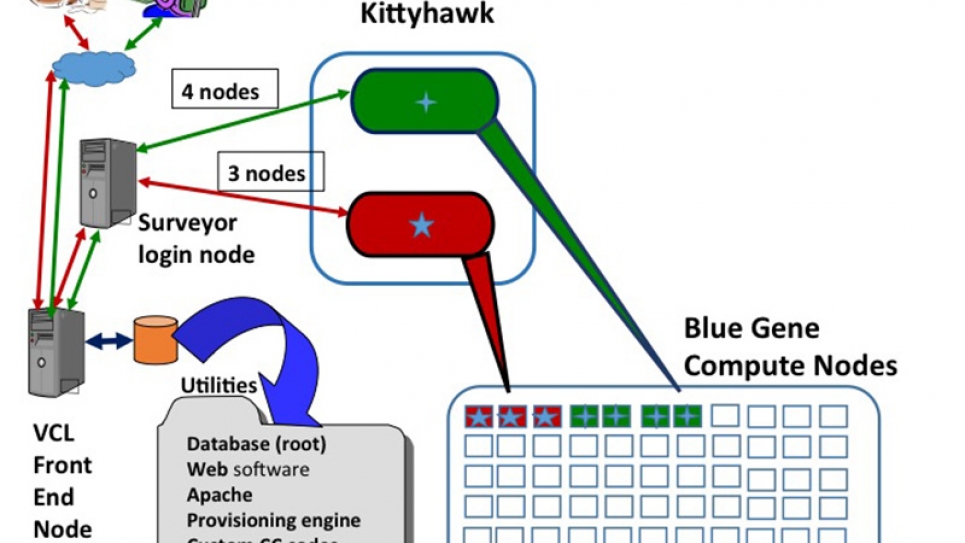

To carry out this research program, we needed to identify both a suitable supercomputer and a cloud computing system. For the supercomputer platform, we selected Surveyor, an IBM Blue Gene/P system at the ALCF. This machine provided a non-production test and development platform with 1,024 quad-core nodes (4,096 processor cores) and 2 terabytes of memory, delivering a peak performance of 13.9 teraflops. Surveyor also offered access to Kittyhawk, an open-source software utility originally developed as part of a project sponsored by IBM Research. This tool acts as a provisioning engine with basic network, control, and communications components, which allowed us to explore the construction of a next-generation platform capable of hosting many simultaneous web-scale workloads. In addition, Kittyhawk provided a group of basic low-level computing services within a Blue Gene/P system. These services enabled customized allocation and interconnection of the computing resources, on top of which higher-level applications and services could be installed using standard open-source software. In principle, this could also support isolating the embedded cloud from the rest of the environment.
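The Surveyor figures quoted above can be cross-checked with a short back-of-the-envelope calculation. The sketch below is illustrative only: the node and core counts come from the text, while the clock rate (850 MHz), floating-point operations per cycle per core (4), and memory per node (2 GB) are assumptions based on commonly published Blue Gene/P specifications and are not stated in this article.

```python
# Back-of-the-envelope check of the Surveyor figures quoted in the text.

# Stated in the article
NODES = 1024
CORES_PER_NODE = 4  # quad-core nodes

# Assumptions (not from the article): commonly published Blue Gene/P specs
CLOCK_GHZ = 0.85       # 850 MHz cores
FLOPS_PER_CYCLE = 4    # per core, via the dual floating-point unit
MEM_PER_NODE_GB = 2    # 2 GB of memory per node


def peak_teraflops(nodes, cores_per_node, clock_ghz, flops_per_cycle):
    """Theoretical peak = nodes x cores x clock (GHz) x flops per cycle."""
    gigaflops = nodes * cores_per_node * clock_ghz * flops_per_cycle
    return gigaflops / 1000.0


total_cores = NODES * CORES_PER_NODE
peak_tf = peak_teraflops(NODES, CORES_PER_NODE, CLOCK_GHZ, FLOPS_PER_CYCLE)
total_mem_tb = NODES * MEM_PER_NODE_GB / 1024

print(f"cores:  {total_cores}")
print(f"peak:   {peak_tf:.1f} teraflops")
print(f"memory: {total_mem_tb:.0f} TB")
```

Under these assumptions, the calculation reproduces the 4,096 cores, roughly 13.9 teraflops of peak performance, and 2 terabytes of memory cited for Surveyor.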

Using the original basic building blocks of Kittyhawk, our project took advantage of direct access to the network and communication hardware within Surveyor to construct an embedded elastic cloud computing infrastructure within the supercomputer. For the cloud computing system, we used the VCL software originally designed and developed at NC State University. We chose VCL as the test platform because it is an open-source system with an award-winning, production-level track record as a distributed cloud. The complete VCL cloud and resource management system offers a full range of service capabilities, from Hardware as a Service (HaaS) to Infrastructure as a Service (IaaS), Platform as a Service (PaaS), Software as a Service (SaaS), and the more complex Everything as a Service (XaaS) options. Its robust cloud architecture includes a graphical user interface, an application programming interface, authentication plug-in mechanisms, on-demand resource management and scheduling, bare-machine and virtualized machine provisioning, a provenance engine, sophisticated role-based resource access, and license and scheduling options.

With these resources and the support of ALCF staff, we demonstrated a proof-of-concept implementation showing that a fully functioning production cloud computing environment can be completely embedded within a supercomputer and can take advantage of the platform’s HPC hardware infrastructure. This type of design automatically benefits from the installed high-speed, low-latency interconnects among the processors. Embedding the cloud computing system entirely within the supercomputer also capitalizes on the localized, homogeneous, and uniform HPC architecture that is usually not found in generic cloud computing clusters. The result has the potential not only to support traditional HPC simulations, but also to allow sophisticated in situ hybrid workflows that combine HPC and non-HPC analytics, as well as data-flow-oriented processing. In this way, it draws on the supercomputer’s ability to deliver computational power in a mixed mode. The proof-of-concept implementation also has the potential to scale to other supercomputers that have the Kittyhawk utility installed. The project team is continuing its work, with the aim of extending this novel computing environment to other HPC platforms.