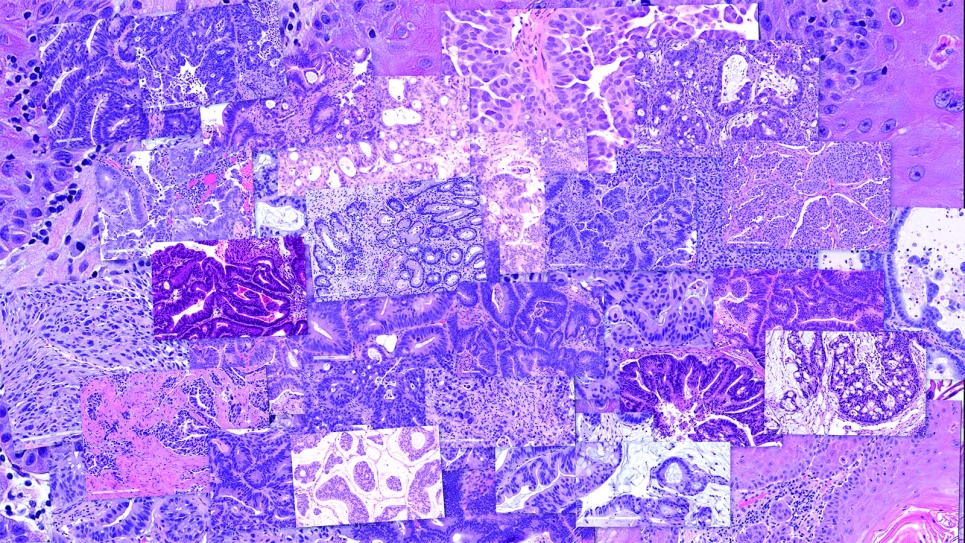

Predicting cancer type and drug response using histopathology images from the National Cancer Institute’s Patient-Derived Models Repository. Image: Rick Stevens, Argonne National Laboratory.

Cancer continues to represent a leading cause of death globally, accounting for some 10 million deaths every year.

The U.S. Department of Energy (DOE) leverages its leadership computing resources—including those at the Argonne Leadership Computing Facility (ALCF), a U.S. DOE Office of Science user facility—to accelerate the research and development of effective cancer treatments. Limited data and a proliferation of deep learning models without standard benchmarking, however, can restrict the extent to which state-of-the-art artificial intelligence (AI) technologies can be used in such research.

To overcome these challenges, DOE and the National Cancer Institute (NCI) fund the IMPROVE (Innovative Methodologies and New Data for Predictive Oncology Model Evaluation) project, which aims to collect relevant drug-response prediction models. The IMPROVE team has shifted from developing deep learning models to curating them. It is also building a framework for comparing the models and generating high-quality data.

To demonstrate the framework, the IMPROVE team is using models that predict how cancer cells respond to treatment with known or unknown small molecules.

“So-called ‘small molecules,’ once granted FDA approval, are cancer therapeutics, usually in the form of chemotherapy,” explained Thomas Brettin, strategic program manager at Argonne and a principal investigator on the IMPROVE project.

“We’re evaluating the models and data available in the community, and then curating the community’s models and data,” Brettin said. “We’re developing a framework to run on ALCF resources: Polaris and Aurora.” Aurora is the ALCF’s exascale supercomputer, powered by graphics processing units (GPUs).

Model curation: precision medicine

The first branch of the IMPROVE project focuses on precision medicine—methods that tailor medical decisions, practices, and treatments to individual patients, based on each patient’s predicted response.

“Our goal here is to enable oncologists to use models that recommend treatment based on a genetic, protein, or other molecular profile that can be generated from a biopsy,” Brettin explained. “So to get to that point, we need to develop protocols for comparing deep learning models of response to cancer therapeutics and to identify which elements, components, and properties contribute to prediction performance.”

This means being able to quickly and accurately compare models and assess which are performing best in as fair and biologically relevant a manner as possible.

“There are currently as many as 80 or 90 different drug response prediction models circulating throughout the research community,” he said. “Despite their proliferation, there are no benchmarks and no standard by which to compare the models to each other.”

ALCF supercomputing resources are used to optimize hyperparameters, the variables that control the models’ learning process; optimization is accomplished by running large ensembles of models.

“The aim is to train a large ensemble of models and to examine how well each model performs on specific drugs and how well each model performs on specific cell lines,” Brettin said. (Cell lines are cell cultures suitable for repeated propagation, with existing screening data available.)
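
As a rough illustration of the per-drug and per-cell-line comparison Brettin describes, the sketch below groups prediction errors by model and drug, and by model and cell line. The record layout, the field names, the labels such as `m1` and `drug_A`, and the use of mean absolute error are all assumptions made for this example, not details of the IMPROVE framework.

```python
from collections import defaultdict
from statistics import mean


def grouped_mae(records, *keys):
    """Mean absolute error of predictions, grouped by the given record fields."""
    errors = defaultdict(list)
    for r in records:
        group = tuple(r[k] for k in keys)
        errors[group].append(abs(r["predicted"] - r["observed"]))
    return {group: mean(vals) for group, vals in errors.items()}


# Hypothetical predictions from two ensemble members (illustrative values only).
records = [
    {"model": "m1", "drug": "drug_A", "cell_line": "line_1", "observed": 0.50, "predicted": 0.25},
    {"model": "m1", "drug": "drug_A", "cell_line": "line_2", "observed": 0.25, "predicted": 0.50},
    {"model": "m1", "drug": "drug_B", "cell_line": "line_1", "observed": 0.75, "predicted": 0.75},
    {"model": "m2", "drug": "drug_A", "cell_line": "line_1", "observed": 0.50, "predicted": 0.50},
    {"model": "m2", "drug": "drug_A", "cell_line": "line_2", "observed": 0.25, "predicted": 0.75},
    {"model": "m2", "drug": "drug_B", "cell_line": "line_1", "observed": 0.75, "predicted": 0.50},
]

by_drug = grouped_mae(records, "model", "drug")
by_cell_line = grouped_mae(records, "model", "cell_line")
```

Looking at errors per group rather than as one overall score is what lets a comparison show that a model does well on one drug or cell line and poorly on another.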

Once the researchers have identified the best settings for training the models, they perform another type of experiment. The goal is to determine how well models trained on data generated experimentally by one organization perform when applied to data from a different organization.

“We’d presume that the different organizations would have different protocols, but each would be generating the same output, which is data describing how a tumor grows when exposed to a particular drug or drug combination,” he explained. “We call this a cross-study generalization analysis. This kind of analysis helps identify bias in the models, which is crucial for their improvement.”
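
A cross-study generalization analysis can be pictured as a table of scores: train on each source study, then evaluate on every study. The sketch below is a minimal version of that idea under heavy assumptions. The study names and response values are hypothetical, and the "model" is a stand-in that simply predicts the mean training response; real drug-response models are far richer.

```python
from statistics import mean


def fit_mean_model(train_responses):
    """Toy stand-in for training: the model always predicts the training mean."""
    return mean(train_responses)


def mae(prediction, test_responses):
    """Mean absolute error of a constant prediction against observed responses."""
    return mean(abs(prediction - y) for y in test_responses)


def cross_study_matrix(studies):
    """Train on each source study and score the result on every target study."""
    matrix = {}
    for source, train_responses in studies.items():
        model = fit_mean_model(train_responses)
        matrix[source] = {
            target: mae(model, test_responses)
            for target, test_responses in studies.items()
        }
    return matrix


# Hypothetical response values from two organizations (illustrative only).
# Note: the diagonal here reuses the training data; a real analysis would
# evaluate on a held-out split of the source study instead.
studies = {
    "study_A": [0.25, 0.75],
    "study_B": [0.75, 1.0],
}

matrix = cross_study_matrix(studies)
```

Off-diagonal entries that are much worse than the diagonal are the kind of signal that points to study-specific bias.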

Moreover, the IMPROVE researchers want to fully automate the comparison of models and assessment of performance impacts resulting from training and validation choices.

“What we are trying to build is an automated framework that enables massive cross-comparisons of thousands and tens of thousands of models at once,” Brettin said.

Data generation and discovery of new treatments

“The second branch of the IMPROVE project aims to improve the predictive performance of the deep learning models,” Brettin said. “Identifying compounds and tumor cells whose features suggest they improve the model’s predictive performance, and ultimately make the models more useful for suggesting compounds that can inhibit tumor growth.”

Predicting compounds that inhibit tumor growth is only the beginning. Such compounds would also have to be tested for toxicity and synthesizability.

“Can the compound be synthesized? Because these models we’re looking at are essentially virtual stick drawings. Can the compound be administered at a therapeutic dose? These are all computational questions,” he explained.

Argonne researchers have already used ALCF resources while working to answer the toxicity question.

“Additional ongoing research helps us predict whether a small molecule will be toxic to the heart or liver, or if it will cause birth defects, and other such critical impacts. If we can generate what we refer to as property models, then we can use them in a reinforcement-learning context,” Brettin said.

“That is, suppose an AI generative model is used to generate new molecules,” he continued. “We want to be able to immediately evaluate those molecules for their synthesizability, their toxicity, and any other relevant property, and then feed that data back into the generative process so that the molecules being generated for testing are less likely to be toxic and more likely to be synthesizable, and so on.”
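
The feedback idea can be sketched in a few lines: score each generated candidate with property models, then shift the generator's sampling weights toward candidates that score well. Everything in this example is an assumption made for illustration, including the candidate names, the property scores, the way the two properties are combined into one reward, and the simple weight update, which is a much-simplified stand-in for a reinforcement-learning procedure.

```python
def composite_reward(toxicity, synthesizability):
    """Combine two predicted properties (each in [0, 1]) into a single reward."""
    return synthesizability * (1.0 - toxicity)


def update_weights(weights, rewards, learning_rate=0.5):
    """Raise the weight of above-average candidates, then renormalize to sum to 1."""
    baseline = sum(rewards.values()) / len(rewards)
    raw = {
        name: w * (1.0 + learning_rate * (rewards[name] - baseline))
        for name, w in weights.items()
    }
    total = sum(raw.values())
    return {name: value / total for name, value in raw.items()}


# Hypothetical property-model outputs for three generated molecules.
predicted = {
    "mol_1": {"toxicity": 0.1, "synthesizability": 0.9},
    "mol_2": {"toxicity": 0.8, "synthesizability": 0.9},
    "mol_3": {"toxicity": 0.2, "synthesizability": 0.3},
}

rewards = {name: composite_reward(**props) for name, props in predicted.items()}
weights = {name: 1.0 / len(predicted) for name in predicted}  # start uniform
new_weights = update_weights(weights, rewards)
```

After one update, the low-toxicity, easily synthesized candidate carries more weight than the other two, which is the direction of travel the quote describes.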

“It’s also important to determine whether therapeutic doses would even be deliverable,” he continued. “In what amount does the molecule inhibit tumor growth? You can’t deliver somebody a shovel’s worth of powder.”

To this end, Argonne researchers aim to develop protocols for designing drug and therapeutic experiments that can be used to generate data to refine the performance of deep learning models.

Data include RNA and DNA sequences of cancer models (themselves an assortment of patient-derived organoids, xenograft organoids, and primary cell lines), in addition to drug screening and response data continuously curated and standardized from the public domain. Organoids are lab-grown, three-dimensional cell structures that mimic the architecture and function of real organs, providing a valuable model for studying disease and drug responses.

“We’re doing these runs multiple times to get variation between deep learning models that have been trained slightly differently so as to understand if a model predicts that such and such drug will inhibit at, say, the 50 percent level,” Brettin said. “We are trying to ask, how consistent is it? That is, if we train the model today, how will its score be affected if we randomize the data a small amount while retaining the same test object, the same specific drug?

“And we train it again and run it again so that we’re able to understand the uncertainty in the model (the variability in the prediction) as a function of a specific drug, or as a function of a specific cell line cancer type, or as a function of the neural network architecture,” he continued. “Those runs involve training thousands to tens of thousands of models, which, again, would be impossible without DOE’s leadership computing resources.”
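
The repeated-training idea can be illustrated with a toy experiment: retrain under slightly different random subsamples of the data, then report the spread of predictions for each drug. The sketch below assumes a stand-in "model" that predicts the mean of its training subsample, and the drug names, response values, seeds, and 80 percent sampling fraction are all hypothetical.

```python
import random
from statistics import mean, stdev


def train_and_predict(responses, seed, sample_fraction=0.8):
    """Toy stand-in for one training run: fit on a seeded random subsample."""
    rng = random.Random(seed)
    k = max(1, int(len(responses) * sample_fraction))
    subsample = rng.sample(responses, k)
    return mean(subsample)


def prediction_spread(responses_by_drug, seeds):
    """Mean and standard deviation of predictions across runs, per drug."""
    summary = {}
    for drug, responses in responses_by_drug.items():
        predictions = [train_and_predict(responses, seed) for seed in seeds]
        summary[drug] = (mean(predictions), stdev(predictions))
    return summary


# Hypothetical training responses: drug_A is perfectly consistent, drug_B is not.
responses_by_drug = {
    "drug_A": [0.5, 0.5, 0.5, 0.5, 0.5],
    "drug_B": [0.1, 0.3, 0.5, 0.7, 0.9],
}

summary = prediction_spread(responses_by_drug, seeds=range(10))
```

A standard deviation near zero means the prediction barely moves when the training data is perturbed; a larger one flags a drug, cell line, or architecture for which the prediction is less certain.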